Lambda raised a $320M Series C for a $1.5B valuation, to expand our GPU cloud & further our mission to build the #1 AI compute platform in the world.

The Lambda Deep Learning Blog

Categories

- gpu-cloud (25)

- tutorials (24)

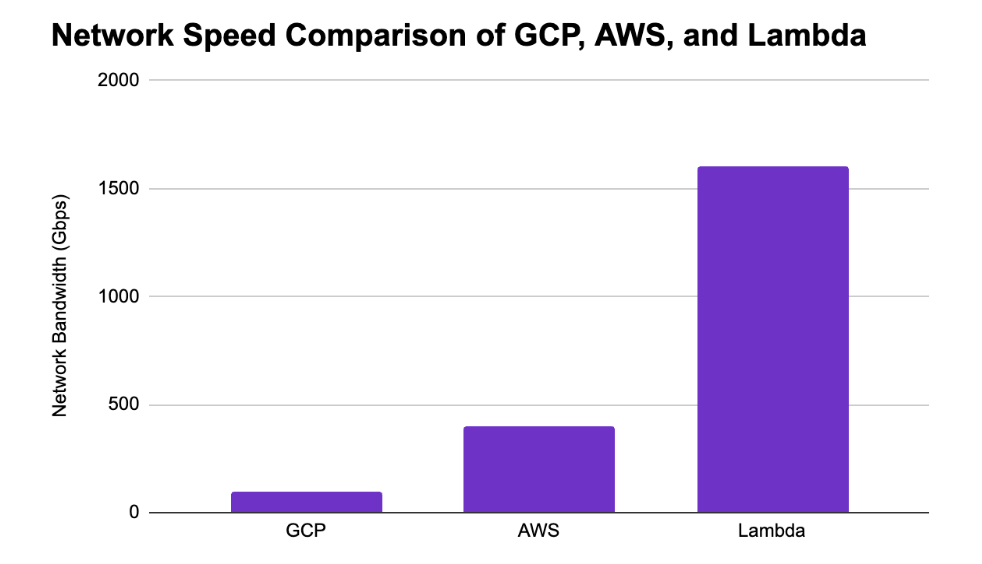

- benchmarks (22)

- announcements (19)

- lambda cloud (13)

- NVIDIA H100 (12)

- hardware (12)

- tensorflow (9)

- NVIDIA A100 (8)

- gpus (8)

- company (7)

- LLMs (6)

- deep learning (6)

- hyperplane (6)

- news (6)

- training (6)

- gpu clusters (5)

- CNNs (4)

- generative networks (4)

- presentation (4)

- research (4)

- rtx a6000 (4)

Recent Posts

Persistent storage is now available in all Lambda Cloud regions and for all on-demand instance types, including our NVIDIA H100 Tensor Core GPU instances.

Published 12/19/2023 by Kathy Bui

Persistent storage for Lambda Cloud is expanding. Filesystems are now available in all regions except Utah, where support is coming very soon.

Published 09/20/2023 by Kathy Bui

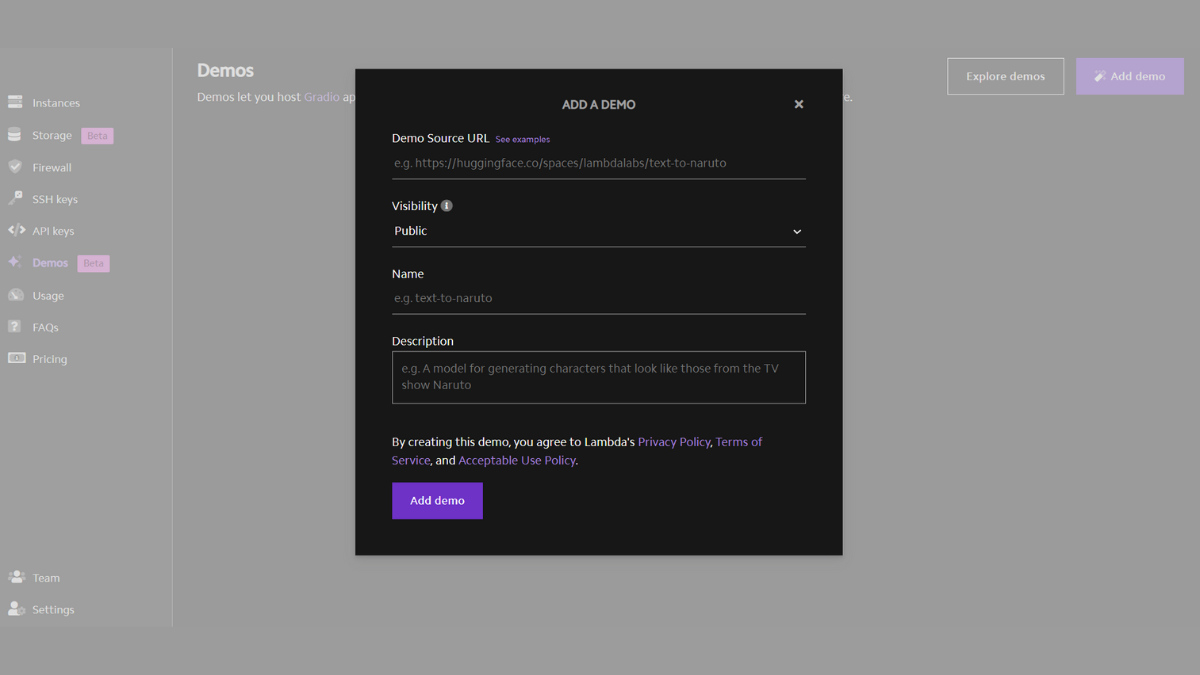

Lambda Demos streamlines the process of hosting your own machine learning demos. Host a Gradio app using your existing repository URL in just a few clicks.

Published 05/24/2023 by Kathy Bui

Tired of waiting in a queue to try out Stable Diffusion or another ML app? Lambda GPU Cloud’s Demos feature makes it easy to host your own ML apps.

Published 05/18/2023 by Cody Brownstein

Lambda secured a $44 million Series B to accelerate the growth of our AI cloud. Funds will be used to deploy new H100 GPU capacity with high-speed network interconnects and develop features that will make Lambda the best cloud in the world for training AI.

Published 03/21/2023 by Stephen Balaban

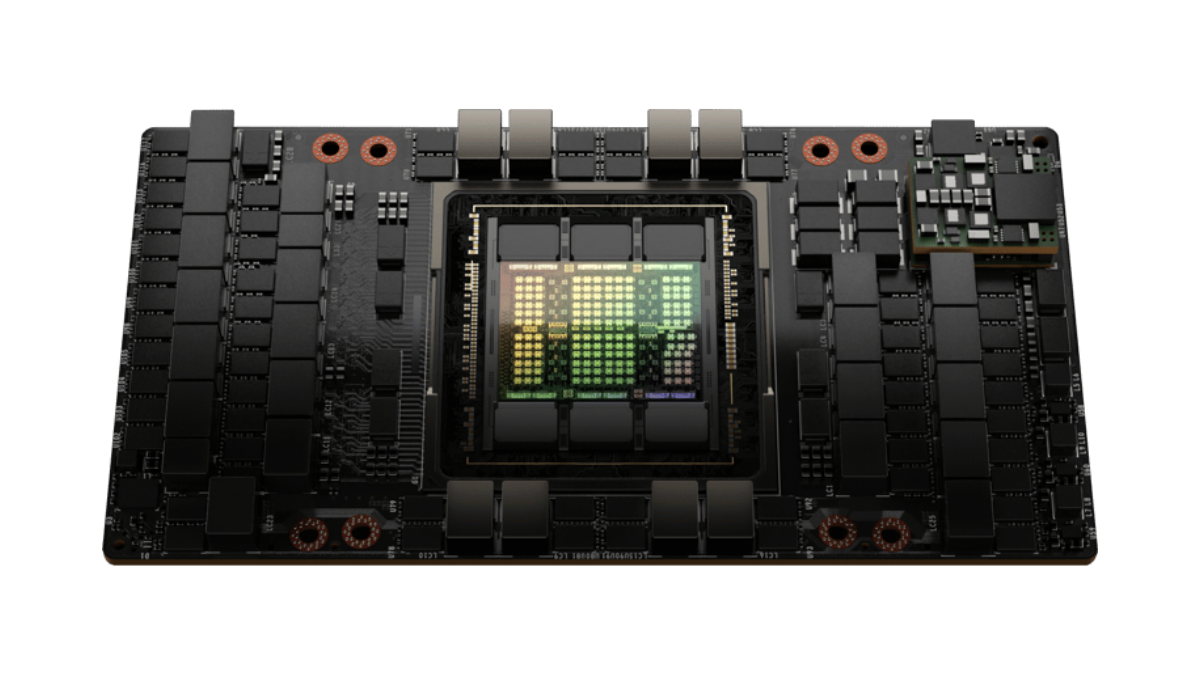

In early April, NVIDIA H100 Tensor Core GPUs, the fastest GPU type on the market, will be added to Lambda Cloud. NVIDIA H100 80GB PCIe Gen5 instances will go live first, with SXM instances following shortly after.

Published 03/21/2023 by Mitesh Agrawal

Learn how to use mpirun to launch a LLaMA inference job across multiple cloud instances if you do not have a multi-GPU workstation or server.

Published 03/14/2023 by Chuan Li

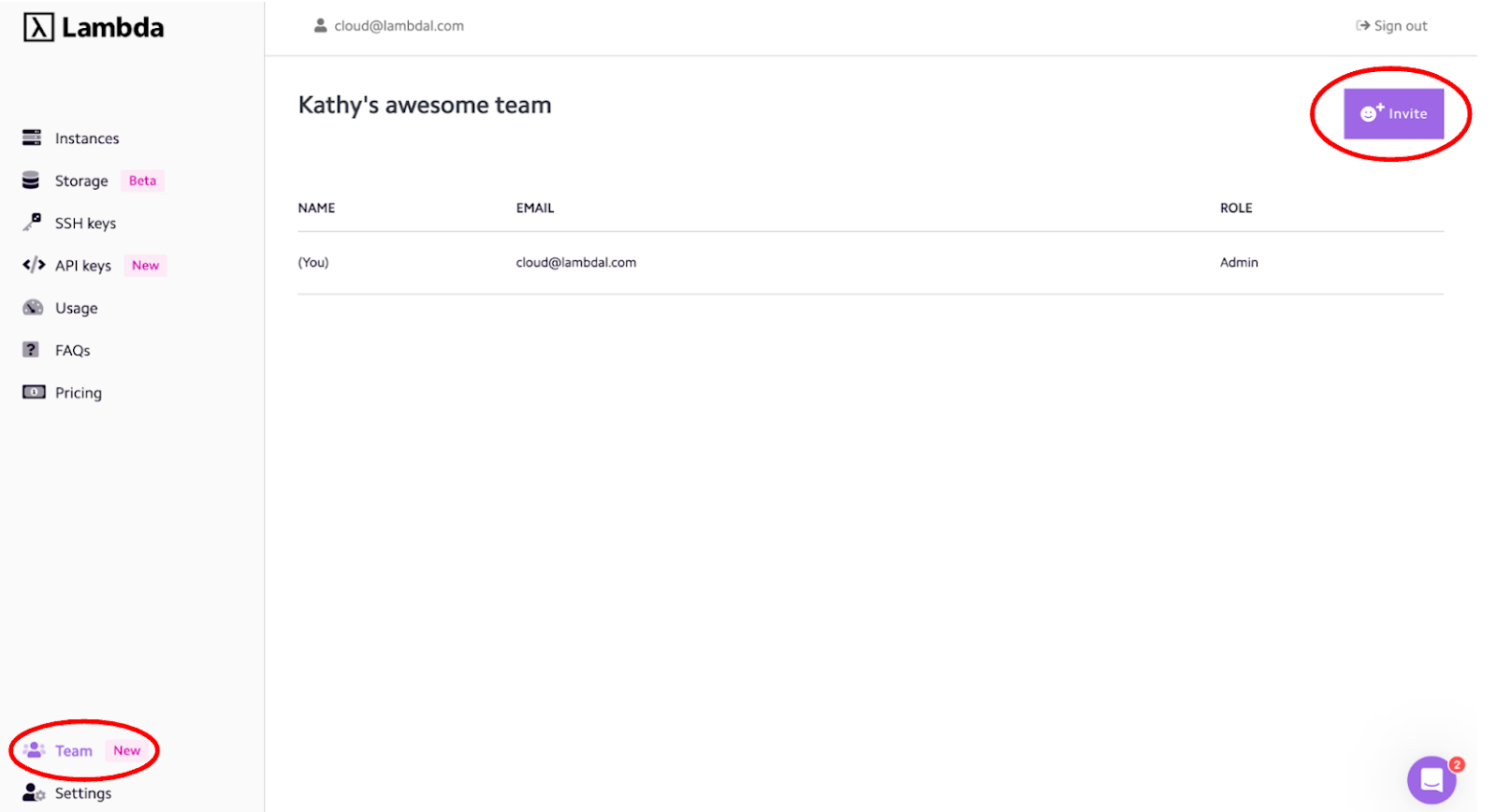

Lambda's GPU cloud has a new team feature that allows you to invite your team to join your account for easy collaboration and more.

Published 01/13/2023 by Kathy Bui

Lambda and Hugging Face are collaborating on a 2-week sprint to fine-tune OpenAI's Whisper model in as many languages as possible.

Published 12/01/2022 by Chuan Li

This post walks through how to fine-tune Stable Diffusion to create a text-to-Naruto character model, emphasizing the importance of prompt engineering.

Published 11/02/2022 by Eole Cervenka

In this post, we outline the benefits of our new Reserved Cloud Cluster and show how Voltron Data is using it to work with large datasets.

Published 11/01/2022 by Lauren Watkins

In this post, we go over the updates we made to the Lambda on-demand GPU cloud in September 2022.

Published 10/11/2022 by Cody Brownstein