Lambda Cloud Adding NVIDIA H100 Tensor Core GPUs in Early April

Lambda has exciting news to share about the arrival of NVIDIA H100 Tensor Core GPUs. In early April, Lambda will add this high-performance instance type to our fleet, giving customers on-demand access to the fastest GPU type on the market. NVIDIA H100 80GB PCIe Gen5 instances will go live first, with SXM instances to follow shortly after.

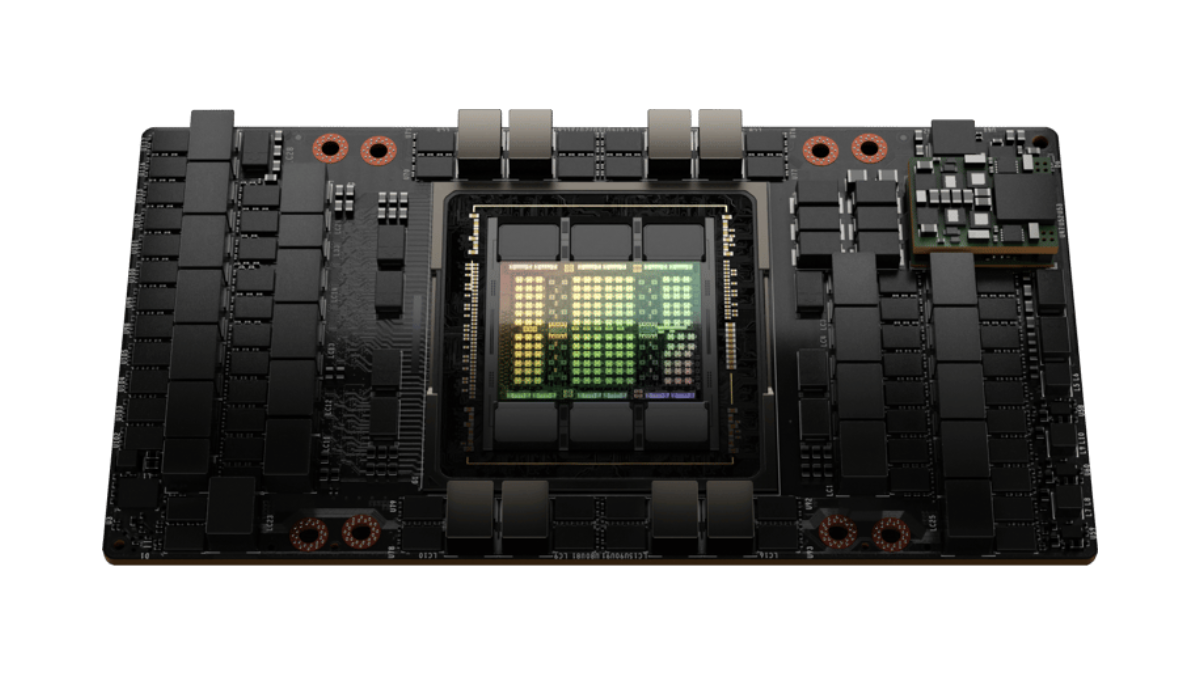

NVIDIA H100 GPUs enable an order-of-magnitude leap for compute-intensive workloads like large-scale AI training, high-performance computing (HPC) and real-time inference for leading-edge generative AI and large language models (LLMs). Featuring fourth-generation Tensor Cores, Transformer Engine with FP8 precision and second-generation Multi-Instance GPU technology, the NVIDIA H100 speeds up both training and inference on LLMs.
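To make the FP8 point concrete, here is a rough back-of-the-envelope sketch of how precision affects whether a model's weights fit in the 80GB of a single H100. It counts weights only (no activations, KV cache or optimizer state), treats 80GB as 80e9 bytes, and uses a hypothetical 70-billion-parameter model that we picked purely for illustration. The function names are ours, not part of any NVIDIA or Lambda API.

```python
# Bytes needed to store one parameter at each precision (weights only).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}

# Taken from the "H100 80GB" instance name; assumed here to mean 80e9 bytes.
H100_MEMORY_GB = 80


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the memory needed for the model weights alone, in GB (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_one_gpu(num_params: float, precision: str,
                    gpu_memory_gb: float = H100_MEMORY_GB) -> bool:
    """True if the weights alone fit in GPU memory.

    Ignores activations, KV cache and optimizer state, so real
    workloads need noticeably more headroom than this suggests.
    """
    return weight_memory_gb(num_params, precision) <= gpu_memory_gb


if __name__ == "__main__":
    hypothetical_params = 70e9  # illustrative model size, not from the announcement
    for precision in ("fp16", "fp8"):
        size = weight_memory_gb(hypothetical_params, precision)
        fits = fits_on_one_gpu(hypothetical_params, precision)
        print(f"70B params @ {precision}: {size:.0f} GB of weights, fits on one GPU: {fits}")
```

Under these assumptions, halving the bytes per parameter is what moves the hypothetical model from needing multiple GPUs for its weights to fitting on one. Treat this as a sizing heuristic only, not a performance prediction.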

To accelerate the path to production AI on Lambda Cloud, customers can leverage NVIDIA AI Enterprise, an end-to-end, cloud-native suite of AI software, AI solution workflows, frameworks and pretrained models that optimizes development and deployment of AI-enabled applications on NVIDIA H100 GPUs.

Our collaboration with NVIDIA enables us to be one of the first to market with NVIDIA H100 GPUs. As enterprises continue to turn to cloud-first AI strategies, our longstanding work with NVIDIA positions Lambda to support them and drive long-term success across business environments.

“As the number one computing platform for training generative AI models and LLMs, Lambda is excited about the launch of NVIDIA H100 GPUs on Lambda Cloud coming in early April,” shared Lambda’s Head of Cloud Mitesh Agrawal. “Whether ML teams train in a private or public cloud, Lambda offers a plug-and-play experience complete with the latest NVIDIA H100 and A100 Tensor Core GPUs, a complete ML software stack and technical support from ML and system engineers.”

“With the continued growth of generative AI and large language models, NVIDIA H100 Tensor Core GPUs enable faster training and more efficient inference for cutting-edge AI applications,” said Dion Harris, head of accelerated computing product solutions, NVIDIA. “We’re thrilled to see Lambda harnessing the power of NVIDIA’s technology to drive advancements in AI, and we look forward to the positive impact this collaboration will have on the industry as a whole.”

The addition of NVIDIA H100 PCIe GPUs to Lambda Cloud will be followed closely by the release of NVIDIA H100 SXM GPUs. If you’re working with large Transformer models that involve structured sparsity and large-scale distributed workloads, it’s time to start thinking about upgrading to the NVIDIA H100.

Stay tuned for more information coming from us very soon.