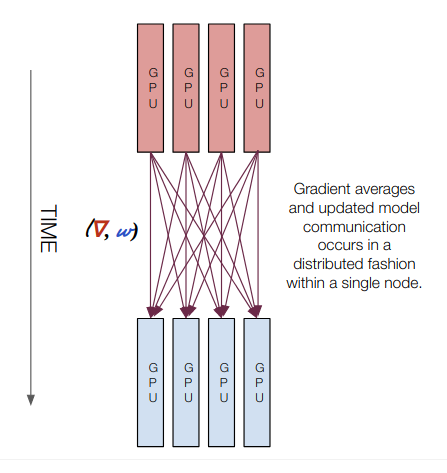

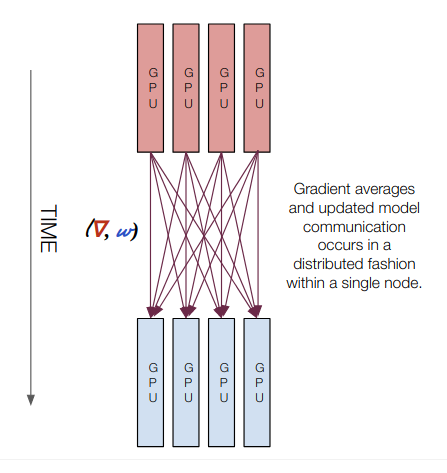

A Gentle Introduction to Multi GPU and Multi Node Distributed Training

This presentation is a high-level overview of the different types of training regimes that you'll encounter as you move from single GPU to multi GPU to multi ...

Published by Stephen Balaban

Update June 5th 2020: OpenAI has announced a successor to GPT-2 in a newly published paper. Check out our GPT-3 model overview.

Published by Stephen Balaban

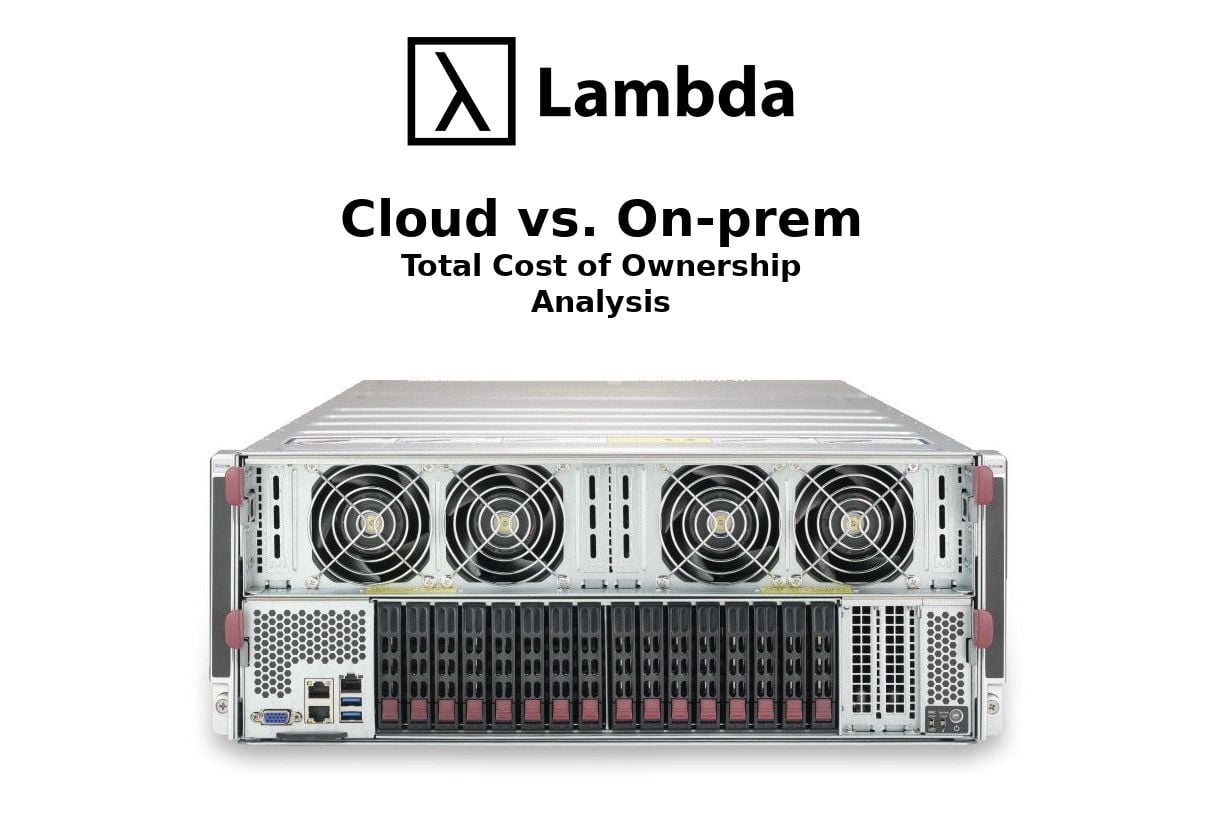

Deep Learning requires GPUs, which are very expensive to rent in the cloud. In this post, we compare the cost of buying vs. renting a cloud GPU server. We use ...

Published by Chuan Li
