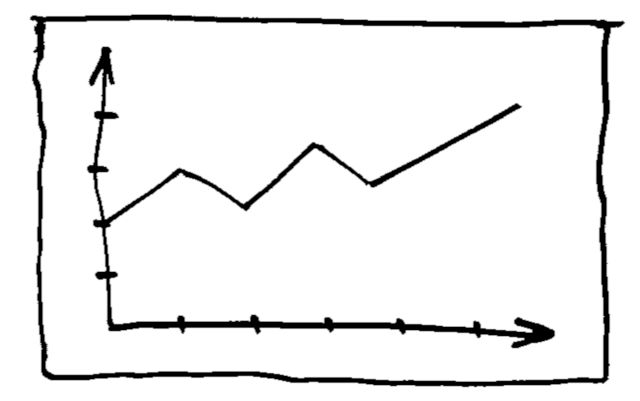

A cost and speed comparison between the Lambda Hyperplane 8 V100 GPU Server and AWS p3 GPU instances. The comparison closely mirrors the one for the DGX-1.

The Lambda Deep Learning Blog

Recent posts

We reproduce the latest Fast.ai/DIUx ImageNet result on a single server with 8 Turing GPUs (Titan RTX). It takes 2.36 hours to reach 93% Top-5 accuracy.

Published 01/15/2019 by Chuan Li

This post tests how fast ResNet9 (the fastest way to train a SOTA image classifier on CIFAR-10) runs on Nvidia's Turing GPUs, including the 2080 Ti and Titan RTX. We also include the 1080 Ti as a baseline for comparison.

Published 01/07/2019 by Chuan Li

...