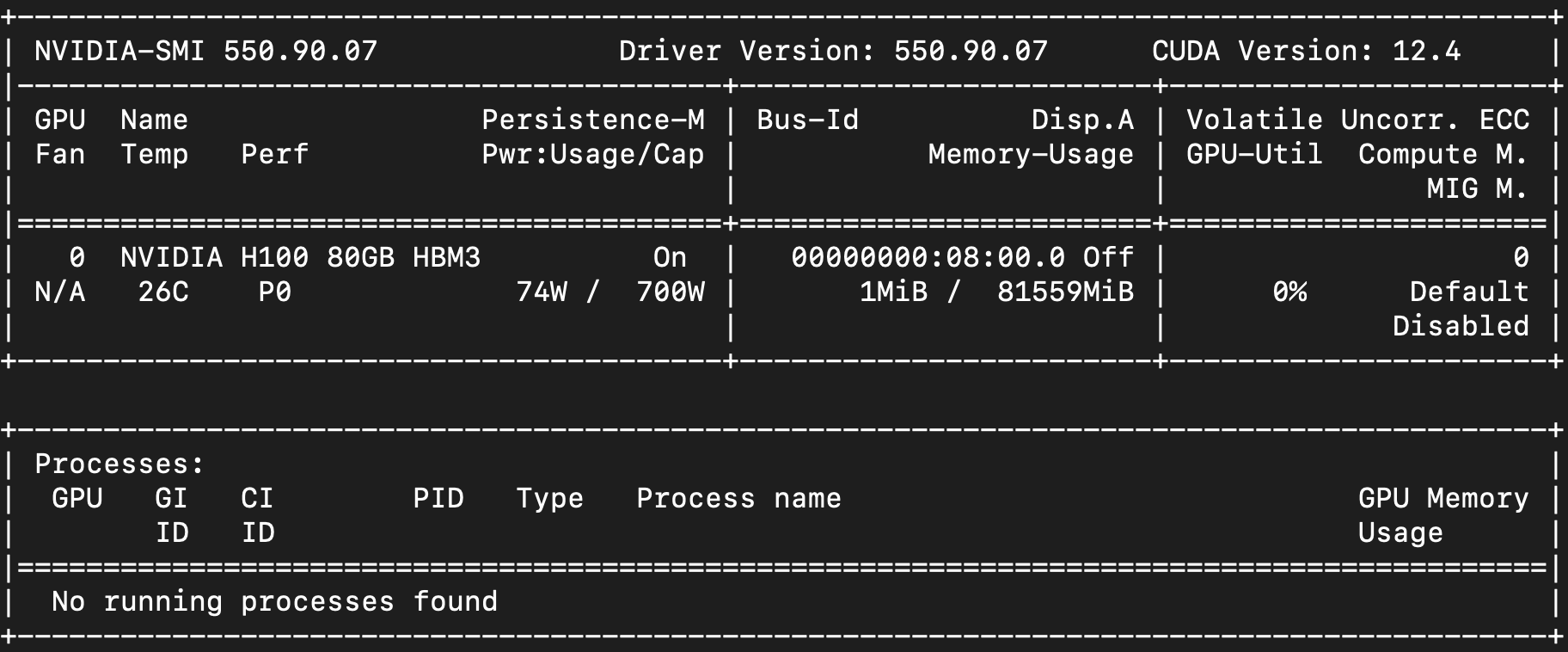

More Options for AI Developers: New On-Demand 1x, 2x and 4x NVIDIA H100 SXM Tensor Core GPU Instances in Lambda’s Cloud

Opening up options: higher-end GPUs in smaller chunks

We're excited to announce the launch of new 1x, 2x, and 4x NVIDIA H100 SXM Tensor Core GPU instances in ...