Chat with a PDF using Falcon: Unleashing the Power of Open-Source LLMs!

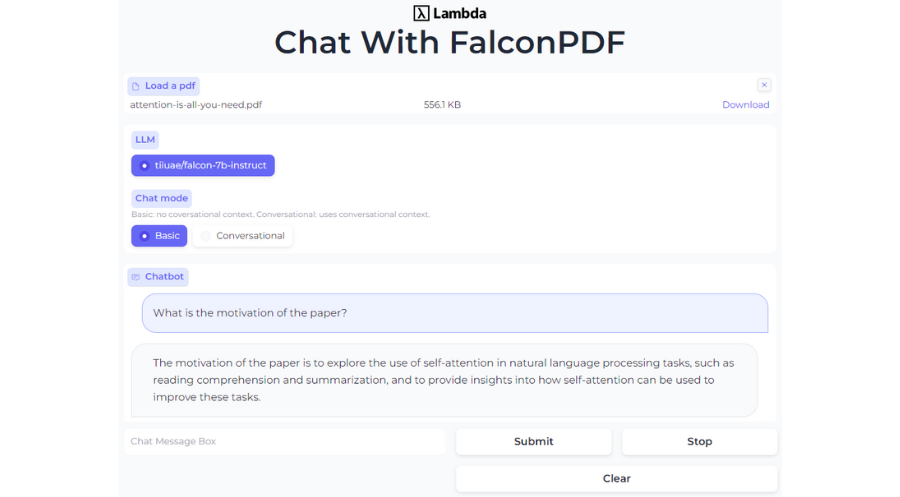

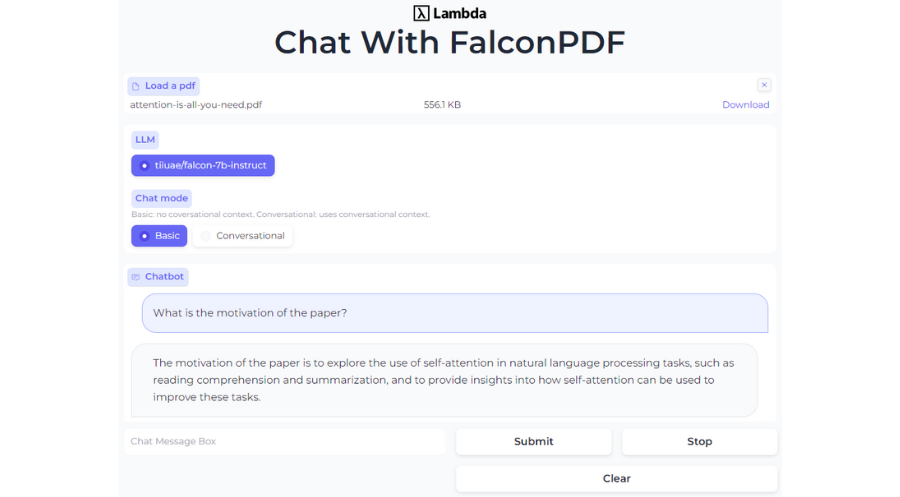

Unlock the potential of open-source LLMs by hosting your own LangChain + Falcon + Chroma application! Now you can upload a PDF and engage in captivating ...

Published by Xi Tian

This guide walks you through how to fine-tune Falcon LLM 7B/40B on a single GPU with LoRA and quantization, enabling data parallelism for linear scaling across ...

Published by Xi Tian
