Persistent storage for Lambda Cloud is expanding. Filesystems are now available in all regions except Utah, where support is coming very soon.

The Lambda Deep Learning Blog

Recent Posts

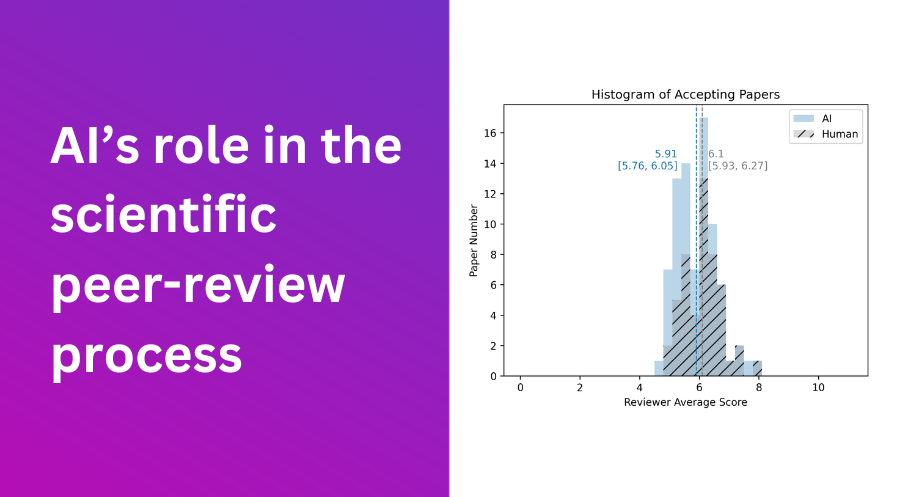

This analysis explores whether a Large Language Model, such as OpenAI's GPT, can help human readers digest scientific reviews.

Published 09/14/2023 by Xi Tian

Lambda has launched a new Hyperplane server combining the fastest GPU on the market, NVIDIA H100, with the world’s best data center CPU, AMD EPYC 9004.

Published 09/07/2023 by Maxx Garrison

How to use FlashAttention-2 on Lambda Cloud, including H100 vs A100 benchmark results for training GPT-3-style models using the new model.

Published 08/24/2023 by Chuan Li

On-demand HGX H100 systems with 8x NVIDIA H100 SXM instances are now available on Lambda Cloud for only $2.59/hr/GPU.

Published 08/02/2023 by Kathy Bui

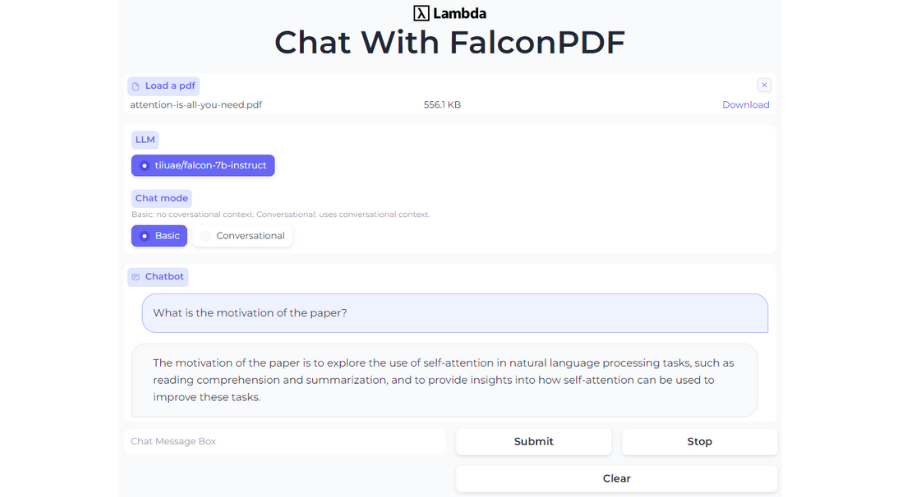

Unlock the potential of open-source LLMs by hosting your very own langchain+Falcon+Chroma application. Upload a PDF and engage in Q&A about its contents.

Published 07/24/2023 by Xi Tian

This blog post provides instructions on how to fine-tune LLaMA 2 models on Lambda Cloud using a $0.60/hr A10 GPU.

Published 07/20/2023 by Corey Lowman

How to build the GPU infrastructure needed to pretrain LLMs and generative AI models from scratch (e.g. GPT-4, LaMDA, LLaMA, BLOOM).

Published 07/13/2023 by David Hall

Learn how to fine-tune Falcon LLM 7B/40B on a single GPU with LoRA and quantization, enabling data parallelism for linear scaling across multiple GPUs.

Published 06/29/2023 by Xi Tian

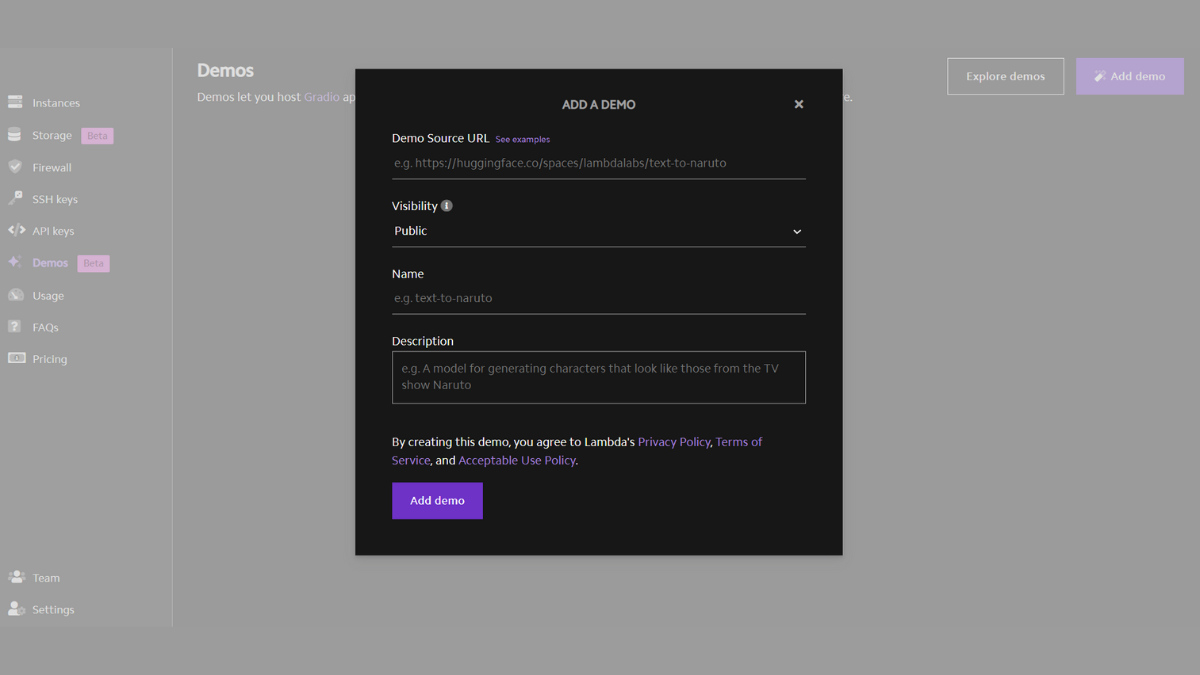

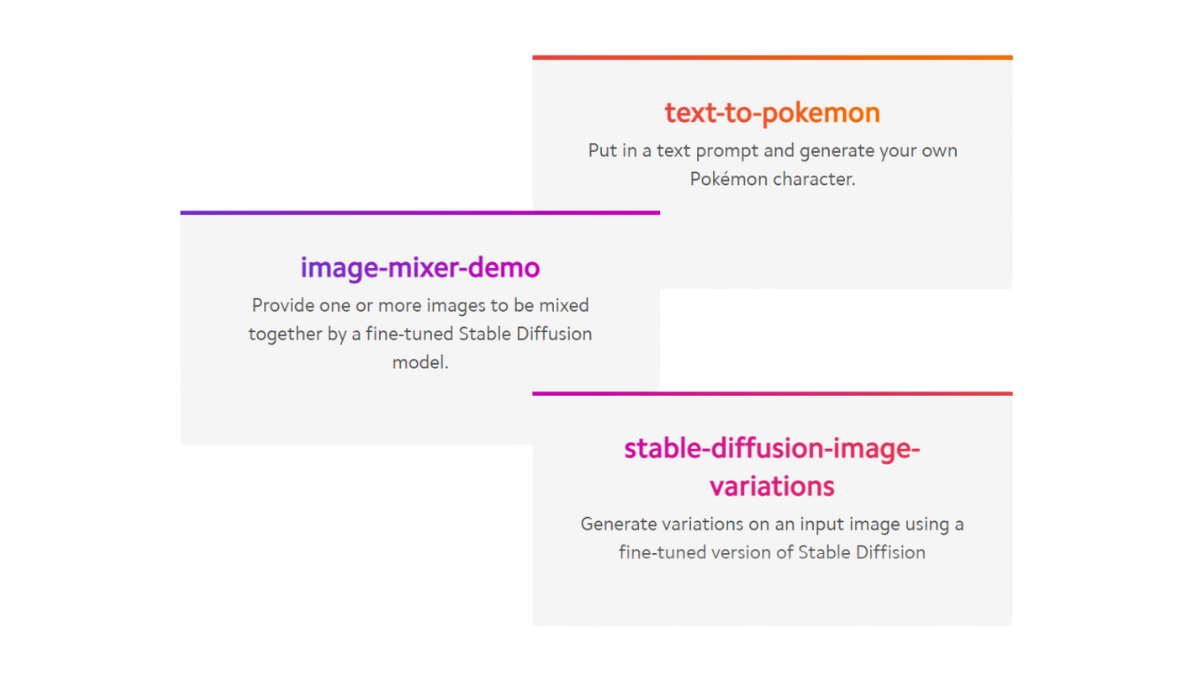

Lambda Demos streamlines the process of hosting your own machine learning demos. Host a Gradio app using your existing repository URL in just a few clicks.

Published 05/24/2023 by Kathy Bui

Tired of waiting in a queue to try out Stable Diffusion or another ML app? Lambda GPU Cloud’s Demos feature makes it easy to host your own ML apps.

Published 05/18/2023 by Cody Brownstein

Lambda Cloud has deployed a fleet of NVIDIA H100 Tensor Core GPUs, making it one of the first to market with general-availability, on-demand H100 GPUs. The high-performance GPUs enable faster training times, better model accuracy, and increased productivity.

Published 05/10/2023 by Kathy Bui

For the third consecutive year, Lambda has been chosen as NVIDIA Partner Network (NPN) Solution Integration Partner of the Year.

Published 04/04/2023 by Jaimie Renner