This tutorial walks you through how to set up a working environment for multi-GPU training with Horovod and Keras.

The Lambda Deep Learning Blog


Recent posts

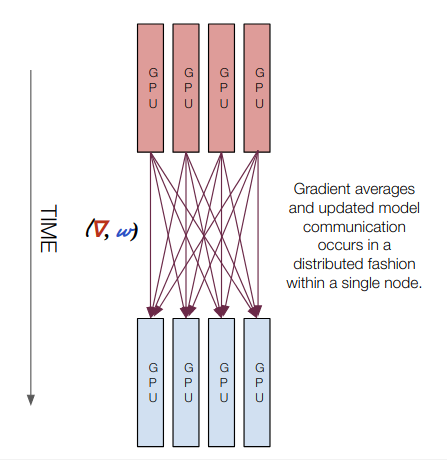

This presentation is a high-level overview of the different training regimes you'll encounter as you move from single-GPU to multi-GPU to multi-node distributed training. It describes where the computation happens, how gradients are communicated, and how models are updated and kept in sync.

Published 05/31/2019 by Stephen Balaban
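The synchronous data-parallel regime summarized above can be sketched in plain Python: each GPU computes a gradient on its own slice of the batch, the gradients are averaged across GPUs, and every replica applies the same update. This is a minimal illustration under stated assumptions, not code from the presentation: a toy one-parameter model `y = w * x`, equal-sized shards, and a hypothetical `allreduce_mean` helper that stands in for a real collective operation such as the allreduce Horovod performs over NCCL or MPI.

```python
from typing import List, Tuple

Shard = Tuple[List[float], List[float]]  # (inputs, targets) held by one worker


def local_gradient(w: float, xs: List[float], ys: List[float]) -> float:
    """Gradient of mean squared error for the toy model y = w * x on one shard."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def allreduce_mean(values: List[float]) -> List[float]:
    """Hypothetical stand-in for an allreduce: every worker receives the mean."""
    avg = sum(values) / len(values)
    return [avg] * len(values)


def data_parallel_step(weights: List[float], shards: List[Shard], lr: float) -> List[float]:
    """One synchronous step: local gradients, averaged by allreduce, identical updates."""
    grads = [local_gradient(w, xs, ys) for w, (xs, ys) in zip(weights, shards)]
    averaged = allreduce_mean(grads)
    return [w - lr * g for w, g in zip(weights, averaged)]


if __name__ == "__main__":
    # Two simulated workers, each holding half of a dataset generated by y = 3x.
    shards = [([1.0, 2.0], [3.0, 6.0]), ([3.0, 4.0], [9.0, 12.0])]
    weights = [0.0, 0.0]  # every replica starts from the same weights
    for _ in range(200):
        weights = data_parallel_step(weights, shards, lr=0.01)
    print(weights)  # both replicas hold the same value, close to 3.0
```

Because the shards are the same size, the mean of the per-shard gradients equals the full-batch gradient a single GPU would compute, which is why the replicas stay in sync without ever exchanging weights after initialization.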

BERT is Google's state-of-the-art (SOTA) method for pre-training language representations. This blog post is about running BERT on multiple GPUs. Specifically, we use the Horovod framework to parallelize the tasks. We list all the changes made to the original BERT implementation and highlight a few places that will make or break performance.

Published 02/06/2019 by Chuan Li