Lambda Cloud Clusters now available with NVIDIA GH200 Grace Hopper Superchip

Lambda Cloud Clusters are now available with the NVIDIA GH200 Grace Hopper Superchip, starting at $5.99/hr. Lambda Cloud Clusters are dedicated NVIDIA GPU clusters optimized for large-scale LLM training, allowing customers to train models across thousands of GPUs with no delays or bottlenecks.

Lambda Cloud Clusters powered by NVIDIA GH200 and NVIDIA Quantum-2 Networking

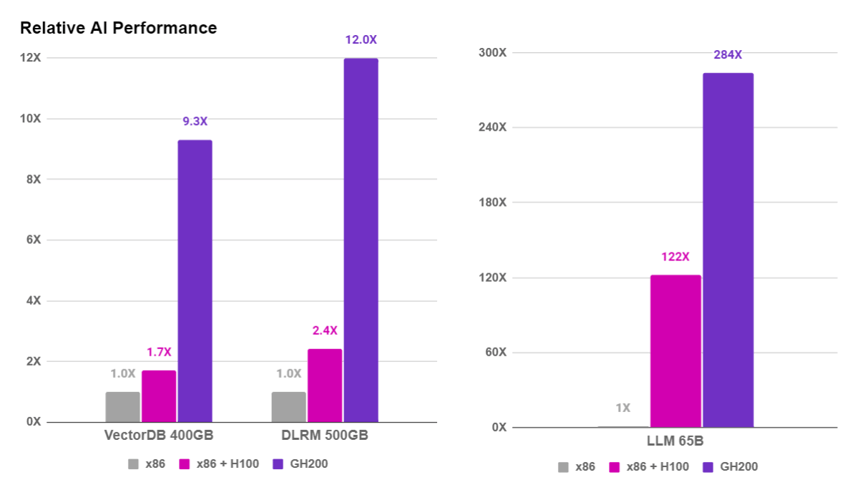

The GH200’s breakthrough design forms a high-bandwidth connection between the NVIDIA Grace CPU and the integrated NVIDIA H100 Tensor Core GPU, built for the era of accelerated computing and generative AI. The GH200 delivers up to 10X higher performance for applications processing terabytes of data, helping scientists and researchers find solutions to the world’s most complex problems.

The GH200’s coherent memory architecture lets the H100 GPU address both its own GPU memory and the Grace CPU’s system memory, a combined 576GB, giving the GH200 unmatched efficiency and price for its memory footprint. Lambda customers can now access this latest infrastructure, built for the next generation of LLMs and other large-scale models.

Lambda’s Cloud Cluster compute fabric leverages non-blocking NVIDIA Quantum-2 400 Gb/s InfiniBand networking, providing high throughput, low latency, and support for NVIDIA GPUDirect RDMA across the entire cluster. NVIDIA Quantum-2 InfiniBand delivers the fastest data transfers between all GH200 Superchips in the cluster.
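The InfiniBand rate above is quoted in gigabits per second (Gb/s), while the NVLink-C2C figure later in this post is in gigabytes per second (GB/s). The sketch below converts between the two and gives an idealized lower bound on transfer time over a single 400 Gb/s link. It assumes no protocol, encoding, or congestion overhead, and the 140 GB payload (roughly the BF16 weights of a 70B-parameter model) is a hypothetical example; the function names are illustrative only.

```python
# Illustrative arithmetic only (not a Lambda or NVIDIA tool).
# Assumption: ideal link, no protocol/encoding/congestion overhead,
# so the transfer times below are lower bounds.

BITS_PER_BYTE = 8


def gbits_to_gbytes(gbits_per_s: float) -> float:
    """Convert a rate in gigabits per second to gigabytes per second."""
    return gbits_per_s / BITS_PER_BYTE


def ideal_transfer_seconds(payload_gb: float, link_gbits_per_s: float) -> float:
    """Lower-bound time to move payload_gb gigabytes over one link."""
    return payload_gb / gbits_to_gbytes(link_gbits_per_s)


if __name__ == "__main__":
    link = 400.0  # NVIDIA Quantum-2 InfiniBand rate quoted in the text
    print(f"{link} Gb/s = {gbits_to_gbytes(link)} GB/s")
    # Hypothetical payload: ~140 GB, about the BF16 weights of a 70B-parameter model
    print(f"140 GB ideal transfer: {ideal_transfer_seconds(140.0, link)} s")
```

A 400 Gb/s link works out to 50 GB/s, so even this idealized estimate puts a 140 GB transfer at about 2.8 seconds per link.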

Key Features of NVIDIA GH200

The NVIDIA GH200 gives ML teams fast access to a large pool of memory and tightly couples the CPU and GPU. This design helps large-scale AI applications such as LLM training and inference scale and run faster, letting customers take on larger datasets, more complex models, and new workloads. Key features include:

- 72-core NVIDIA Grace CPU

- NVIDIA H100 Tensor Core GPU

- 480GB of LPDDR5X system memory

- 96GB of HBM3 GPU memory

- NVLink-C2C: 900GB/s of bidirectional bandwidth between the Grace CPU and the integrated H100 GPU
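The 576GB figure is simply the 96GB of HBM3 plus the 480GB of LPDDR5X listed above. The sketch below uses that arithmetic to check whether a model's weights fit in HBM3 alone or only in the combined pool. It is a hypothetical helper, not a Lambda or NVIDIA tool, and it assumes weights only (no activations, optimizer state, or KV cache), BF16 at 2 bytes per parameter, and 1 GB = 10^9 bytes; the model sizes are illustrative.

```python
# Hypothetical sizing helper (not a Lambda or NVIDIA tool).
# Assumptions: weights only (no activations, optimizer state, or KV cache),
# BF16 = 2 bytes/param, and 1 GB = 1e9 bytes.

HBM3_GB = 96
LPDDR5X_GB = 480
TOTAL_GB = HBM3_GB + LPDDR5X_GB  # 576 GB addressable by the GPU


def weights_gb(num_params: float, bytes_per_param: int) -> float:
    """Size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def placement(size_gb: float) -> str:
    """Report where weights of the given size could reside on one GH200."""
    if size_gb <= HBM3_GB:
        return "fits in HBM3"
    if size_gb <= TOTAL_GB:
        return "needs combined HBM3 + LPDDR5X"
    return "exceeds a single GH200"


if __name__ == "__main__":
    for params in (7e9, 70e9, 300e9):  # hypothetical model sizes
        size = weights_gb(params, bytes_per_param=2)
        print(f"{params / 1e9:.0f}B params -> {size:.0f} GB: {placement(size)}")
```

For example, a 70B-parameter model in BF16 needs about 140GB for its weights: too large for 96GB of HBM3, but well within the 576GB pool. Keep in mind that the LPDDR5X system memory is reached over NVLink-C2C at lower bandwidth than the on-package HBM3, so where data lives still matters for performance.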

NVIDIA GH200 Performance

The NVIDIA GH200 Grace Hopper Superchip combines the most powerful GPU in the world, the NVIDIA H100 Tensor Core GPU, and the most versatile CPU, the NVIDIA Grace CPU, in a single superchip. The coherent NVIDIA NVLink-C2C interconnect provides 900 gigabytes per second (GB/s) of bidirectional bandwidth between the Grace CPU and Hopper GPU, delivering 7X higher memory bandwidth to the GPU than today’s fastest servers using PCIe Gen 5.
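As a back-of-the-envelope check of the 7X figure, the sketch below compares 900 GB/s against a PCIe Gen 5 link. The baseline is an assumption: a single x16 link at 32 GT/s per lane with 128b/130b encoding, counted in both directions so it matches the bidirectional NVLink-C2C number. The function name is illustrative.

```python
# Back-of-the-envelope comparison, not an official NVIDIA calculation.
# Assumption: baseline is one PCIe Gen 5 x16 link (32 GT/s per lane,
# 128b/130b encoding), counted bidirectionally like NVLink-C2C.

NVLINK_C2C_GB_S = 900.0  # bidirectional, as quoted in the text


def pcie_gen5_x16_bidirectional_gb_s() -> float:
    """Approximate usable PCIe Gen 5 x16 bandwidth in GB/s, both directions."""
    lanes = 16
    gt_per_s = 32.0          # giga-transfers/s per lane, 1 bit per transfer
    encoding = 128 / 130     # 128b/130b line-code efficiency
    per_direction = lanes * gt_per_s * encoding / 8  # bits -> bytes
    return 2 * per_direction


if __name__ == "__main__":
    pcie = pcie_gen5_x16_bidirectional_gb_s()
    print(f"PCIe Gen 5 x16: {pcie:.1f} GB/s bidirectional")
    print(f"NVLink-C2C / PCIe Gen 5 x16: {NVLINK_C2C_GB_S / pcie:.1f}x")
```

Under these assumptions a PCIe Gen 5 x16 link carries roughly 126 GB/s in total, so 900 GB/s is about 7.1 times as much, consistent with the 7X claim.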

How to Deploy NVIDIA GH200 with Lambda Cloud

Lambda collaborated with NVIDIA to launch one of the world’s first GH200 cloud clusters. Lambda Cloud Clusters powered by NVIDIA GH200 Superchips are already being deployed, with limited availability. Reach out to us to be among the first in the industry to train LLMs on the NVIDIA GH200.