Lambda Echelon – a turnkey GPU cluster for your ML team

Introducing the Lambda Echelon. Lambda Echelon is a GPU cluster designed for AI. It comes with the compute, storage, network, power, and support you need to ...

Published on by Stephen Balaban

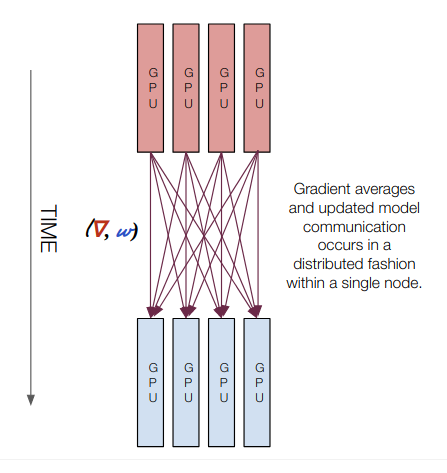

This presentation is a high-level overview of the different types of training regimes that you'll encounter as you move from single GPU to multi GPU to multi ...

Published on by Stephen Balaban

BERT is Google's method for pre-training language representations, which obtained state-of-the-art results on a wide range of Natural Language Processing tasks. ...

Published on by Chuan Li
