Train & fine-tune models on a GPU cloud built for AI workloads

NVIDIA GPUs

Access NVIDIA H100, A100 & A10 Tensor Core GPUs. Additional instance types include NVIDIA RTX A6000, RTX 6000 & NVIDIA V100 Tensor Core GPUs.

Multi-GPU instances

Train and fine-tune AI models across instance types that make sense for your workload & budget: 1x, 2x, 4x & 8x NVIDIA GPU instances available.

Lambda Cloud API

Launch, terminate and restart instances from an easy-to-use, developer-friendly API. Find out more in our API developer docs.
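As a rough illustration of the launch, terminate and restart operations, the sketch below builds (but does not send) HTTP requests using only Python's standard library. The base URL, endpoint paths, JSON field names, example values, and the Bearer-token header are all assumptions made for illustration; none of them come from this page, so check the API developer docs before relying on them.

```python
import json
import urllib.request

# Assumed base URL; confirm against the API developer docs.
API_BASE = "https://cloud.lambdalabs.com/api/v1"


def build_request(operation, payload, api_key):
    """Build a POST request for an instance operation without sending it.

    `operation` is "launch", "terminate", or "restart". The path layout
    and payload shape are hypothetical.
    """
    if operation not in {"launch", "terminate", "restart"}:
        raise ValueError(f"unsupported operation: {operation}")
    return urllib.request.Request(
        url=f"{API_BASE}/instance-operations/{operation}",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical payload: field names and values are placeholders.
launch = build_request(
    "launch",
    {
        "instance_type_name": "gpu_1x_a10",
        "region_name": "us-west-1",
        "ssh_key_names": ["my-key"],
    },
    api_key="YOUR_API_KEY",
)
print(launch.get_method(), launch.full_url)
```

Once the endpoint and payload are confirmed, the request could be sent with `urllib.request.urlopen(launch)`.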

GPU instances pre-configured for machine learning

One-click Jupyter access

Quickly connect to NVIDIA GPU instances directly from your browser.

Pre-installed with popular ML frameworks

Ubuntu, TensorFlow, PyTorch, NVIDIA CUDA, and NVIDIA cuDNN come ready to use with Lambda Stack.
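A quick way to confirm which frameworks are importable on an instance is to ask Python's import system directly. This is a minimal sketch using only the standard library; the helper name `installed_frameworks` is hypothetical, and the check says nothing about versions or GPU visibility.

```python
import importlib.util

# Display name -> Python module name for the frameworks listed above.
FRAMEWORK_MODULES = {"PyTorch": "torch", "TensorFlow": "tensorflow"}


def installed_frameworks(modules=FRAMEWORK_MODULES):
    """Return {display name: True/False} for whether each module can be found."""
    return {
        label: importlib.util.find_spec(name) is not None
        for label, name in modules.items()
    }


print(installed_frameworks())
```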

| GPUs | VRAM / GPU | vCPUs | RAM | STORAGE | PRICE* |
|---|---|---|---|---|---|
| 8x NVIDIA H100 SXM (New) | 80 GB | 208 | 1800 GiB | 26 TiB SSD | $27.92 / hr |
| 1x NVIDIA H100 PCIe (New) | 80 GB | 26 | 200 GiB | 1 TiB SSD | $2.49 / hr |
| 8x NVIDIA A100 SXM | 80 GB | 240 | 1800 GiB | 20 TiB SSD | $14.32 / hr |
| 8x NVIDIA A100 SXM | 40 GB | 124 | 1800 GiB | 6 TiB SSD | $10.32 / hr |
| 1x NVIDIA A100 SXM | 40 GB | 30 | 200 GiB | 512 GiB SSD | $1.29 / hr |
| 4x NVIDIA A100 PCIe | 40 GB | 120 | 800 GiB | 1 TiB SSD | $5.16 / hr |
| 2x NVIDIA A100 PCIe | 40 GB | 60 | 400 GiB | 1 TiB SSD | $2.58 / hr |
| 1x NVIDIA A100 PCIe | 40 GB | 30 | 200 GiB | 512 GiB SSD | $1.29 / hr |
| 1x NVIDIA A10 | 24 GB | 30 | 200 GiB | 1.4 TiB SSD | $0.75 / hr |
| 4x NVIDIA A6000 | 48 GB | 56 | 400 GiB | 1 TiB SSD | $3.20 / hr |
| 2x NVIDIA A6000 | 48 GB | 28 | 200 GiB | 1 TiB SSD | $1.60 / hr |
| 1x NVIDIA A6000 | 48 GB | 14 | 100 GiB | 200 GiB SSD | $0.80 / hr |
| 8x NVIDIA Tesla V100 | 16 GB | 92 | 448 GiB | 5.9 TiB SSD | $4.40 / hr |
| 1x NVIDIA Quadro RTX 6000 | 24 GB | 14 | 46 GiB | 512 GiB SSD | $0.50 / hr |
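The table lists prices per instance, so comparing multi-GPU options is easier after dividing by the GPU count. The sketch below does that for a handful of rows copied from the table; the helper name `price_per_gpu_hour` is made up for this example, and the "(80 GB)" and "(40 GB)" suffixes are added here only to tell the two A100 SXM rows apart.

```python
# (instance, GPU count, hourly price in USD), copied from the table above.
INSTANCES = [
    ("8x NVIDIA H100 SXM", 8, 27.92),
    ("1x NVIDIA H100 PCIe", 1, 2.49),
    ("8x NVIDIA A100 SXM (80 GB)", 8, 14.32),
    ("8x NVIDIA A100 SXM (40 GB)", 8, 10.32),
    ("4x NVIDIA A100 PCIe", 4, 5.16),
    ("4x NVIDIA A6000", 4, 3.20),
    ("8x NVIDIA Tesla V100", 8, 4.40),
]


def price_per_gpu_hour(gpu_count, instance_price):
    """Hourly instance price divided evenly across its GPUs, rounded to cents."""
    return round(instance_price / gpu_count, 2)


for name, gpus, price in INSTANCES:
    print(f"{name}: ${price_per_gpu_hour(gpus, price):.2f} / GPU / hr")
```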

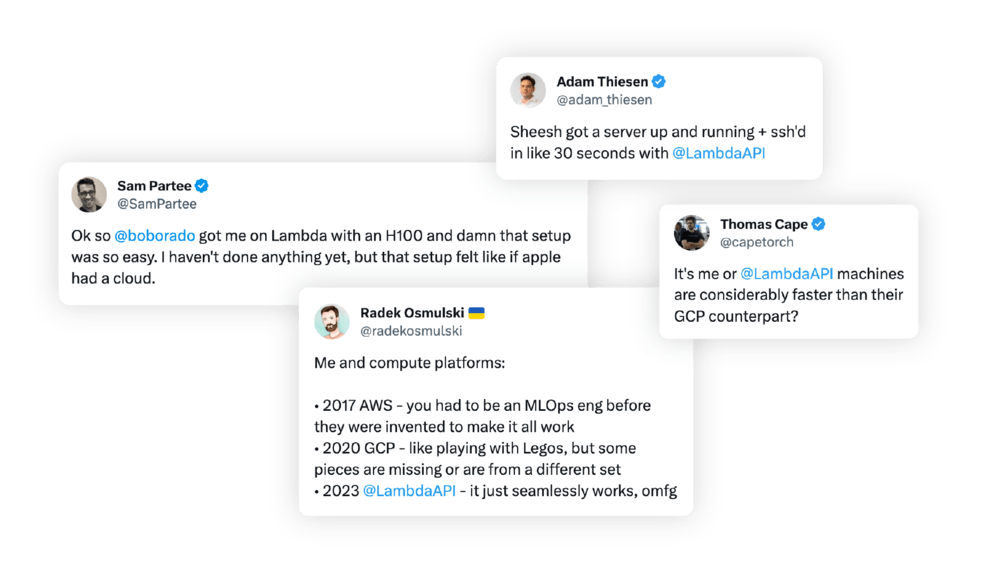

Trusted by thousands of AI developers

ML Engineers & Researchers love Lambda On-Demand Cloud for its simplicity, speed & ML-first user experience.

NVIDIA H100s are now available on-demand

Lambda is one of the first cloud providers to make NVIDIA H100 Tensor Core GPUs available on-demand in a public cloud.

Starting at $2.49/GPU/Hour

High-speed filesystem for GPU instances

Create filesystems in Lambda On-Demand Cloud to persist files and data with your compute.

- Scalable performance: Adapts to growing storage needs without compromising speed.

- Cost-efficient: Only pay for the storage you use, optimizing budget allocation.

- No limitations: No ingress or egress fees, and no hard limit on how much you can store.

| STORAGE | RATE |
|---|---|
| Shared filesystems | $0.20 / GB / month* |

*plus applicable sales tax
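To budget for storage, the rate above can be turned into a monthly estimate. This is a minimal sketch that assumes a flat $0.20 per GB stored for a full month and excludes sales tax; how partial months or fractional GB are metered is not stated here, and the function name is hypothetical.

```python
from decimal import Decimal

# Rate from the table above, in USD per GB per month (before sales tax).
STORAGE_RATE = Decimal("0.20")


def monthly_storage_cost(gb_stored):
    """Estimated monthly cost in USD for `gb_stored` GB of shared filesystem."""
    return STORAGE_RATE * Decimal(gb_stored)


print(f"${monthly_storage_cost(500):.2f} per month for 500 GB")
```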

Save over 68% on your cloud bill

Pay-by-the-second billing

Only pay for the time your instance is running.
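With per-second billing, a job's cost is the hourly rate prorated over the seconds the instance runs. The sketch below shows that arithmetic for a 25-minute run on a 1x NVIDIA A10 at $0.75 / hr; it assumes simple linear proration with no minimum charge or rounding rule, neither of which is specified on this page.

```python
from decimal import Decimal

SECONDS_PER_HOUR = 3600


def on_demand_cost(hourly_rate, seconds_running):
    """Cost in USD for an instance billed per second at `hourly_rate` USD/hr."""
    return Decimal(hourly_rate) * Decimal(seconds_running) / SECONDS_PER_HOUR


# 25 minutes on an instance priced at $0.75 / hr.
cost = on_demand_cost("0.75", 25 * 60)
print(f"${cost:.4f}")
```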

Simple, transparent pricing

No hidden fees like data egress or ingress.

Introducing 1-Click Clusters

On-demand GPU clusters featuring NVIDIA H100 Tensor Core GPUs with Quantum-2 InfiniBand. No long-term contract required. Self-serve directly from the Lambda Cloud dashboard.

Lambda Reserved Cloud

Guaranteed access to clusters in the cloud

GPUs, storage, and networking designed for AI workloads.

One-, two- & three-year contracts

Flexible contract lengths starting at 1 year.

Payment options from 0-100% down

Control your $/GPU/hr price.

Reserved Cloud pricing

The best prices and value for GPU cloud clusters in the industry

| Instance type | GPU | GPU Memory | vCPUs | Storage | Network Bandwidth | Per Hour Price | Term | # of GPUs |
|---|---|---|---|---|---|---|---|---|
| 8x NVIDIA H100 | H100 SXM | 80 GB | 224 | 30 TB local per 8x H100 | 3200 Gbps per 8x H100 | $1.89/H100/hour | 3 years | 64 - 32,000 |
| 8x NVIDIA H200 | H200 SXM | 141 GB | 224 | 30 TB local per 8x H200 | 3200 Gbps per 8x H200 | Contact Sales | 3 years | 64 - 32,000 |
| 1x NVIDIA GH200 | GH200 Superchip | 96 GB | 72 | 30 TB local per GH200 | 400 Gbps per GH200 | $5.99/GH200/hour | 3-12 months | 10 or 20 |
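For a sense of scale, the per-GPU reserved rate can be multiplied out over a full term. The sketch below estimates the smallest H100 commitment in the table (64 GPUs at $1.89/H100/hour for 3 years). It assumes 365-day years, a constant rate billed around the clock, and no taxes or down-payment adjustments; the function name is hypothetical and actual contract terms may differ.

```python
from decimal import Decimal

HOURS_PER_YEAR = 24 * 365  # assumption: 365-day years, billed every hour


def reserved_total(gpu_count, price_per_gpu_hour, years):
    """Total USD over the term at a constant per-GPU hourly price."""
    return Decimal(gpu_count) * Decimal(price_per_gpu_hour) * HOURS_PER_YEAR * years


total = reserved_total(64, "1.89", 3)
print(f"${total:,.2f} over 3 years")
```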