Text Generation: Char-RNN Data preparation and TensorFlow implementation

This tutorial is about making a character-based text generator using a simple two-layer LSTM. It will walk you through the data preparation and the network ...

Published on by Chuan Li

BERT is Google's method for pre-training language representations, which obtained state-of-the-art results on a wide range of Natural Language Processing tasks. ...

Published on by Chuan Li

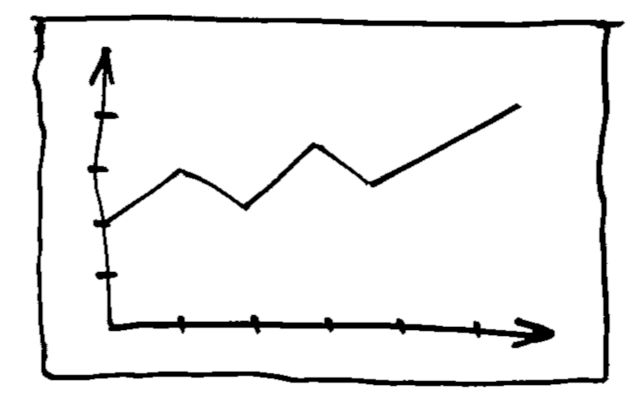

Last year, Fast.ai won the first ImageNet training cost challenge as part of the DAWN benchmark. Their customized ResNet50 takes 3.27 hours to reach 93% ...

Published on by Chuan Li
