The Ultimate GPU Server for Deep Learning

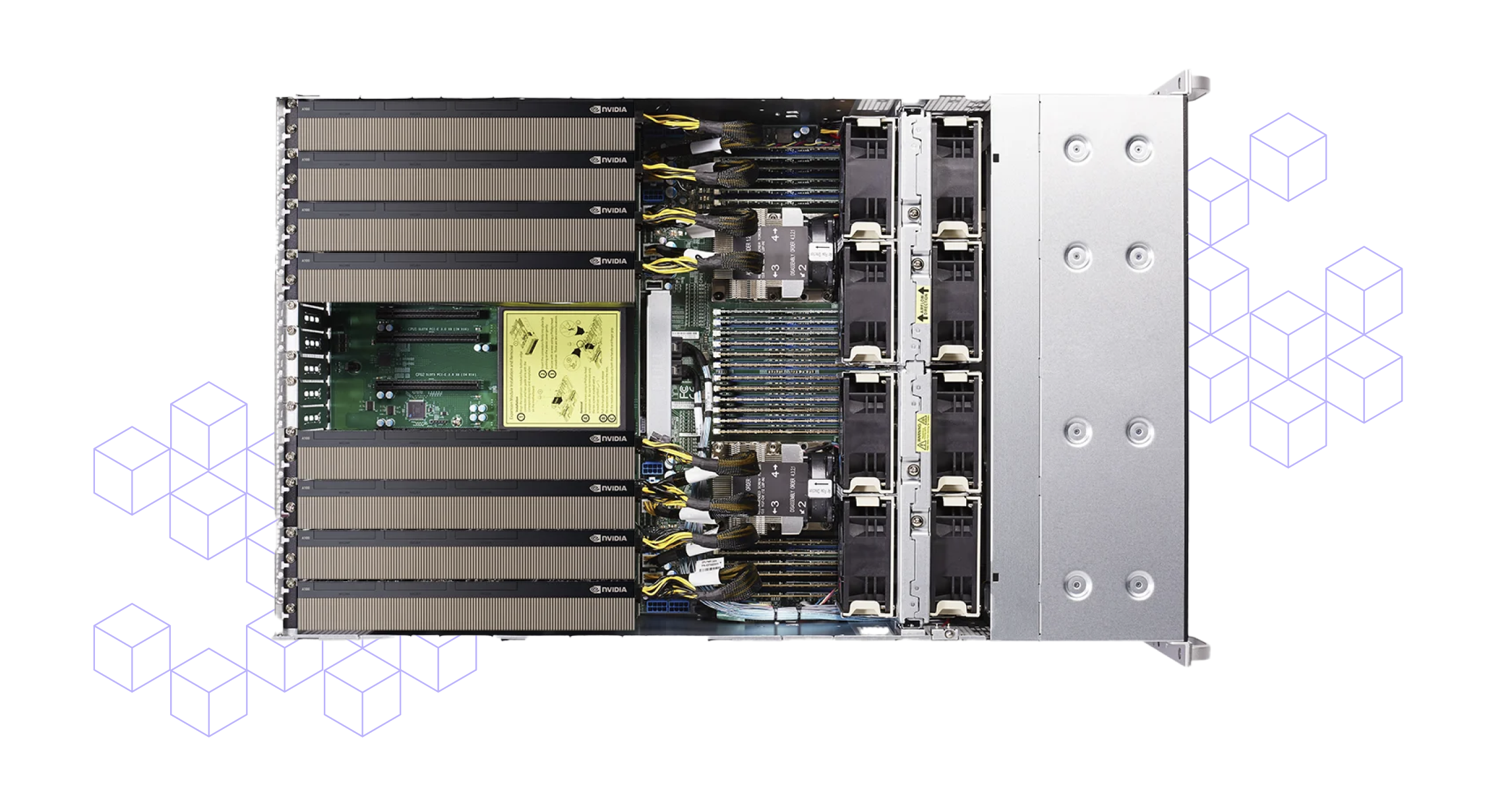

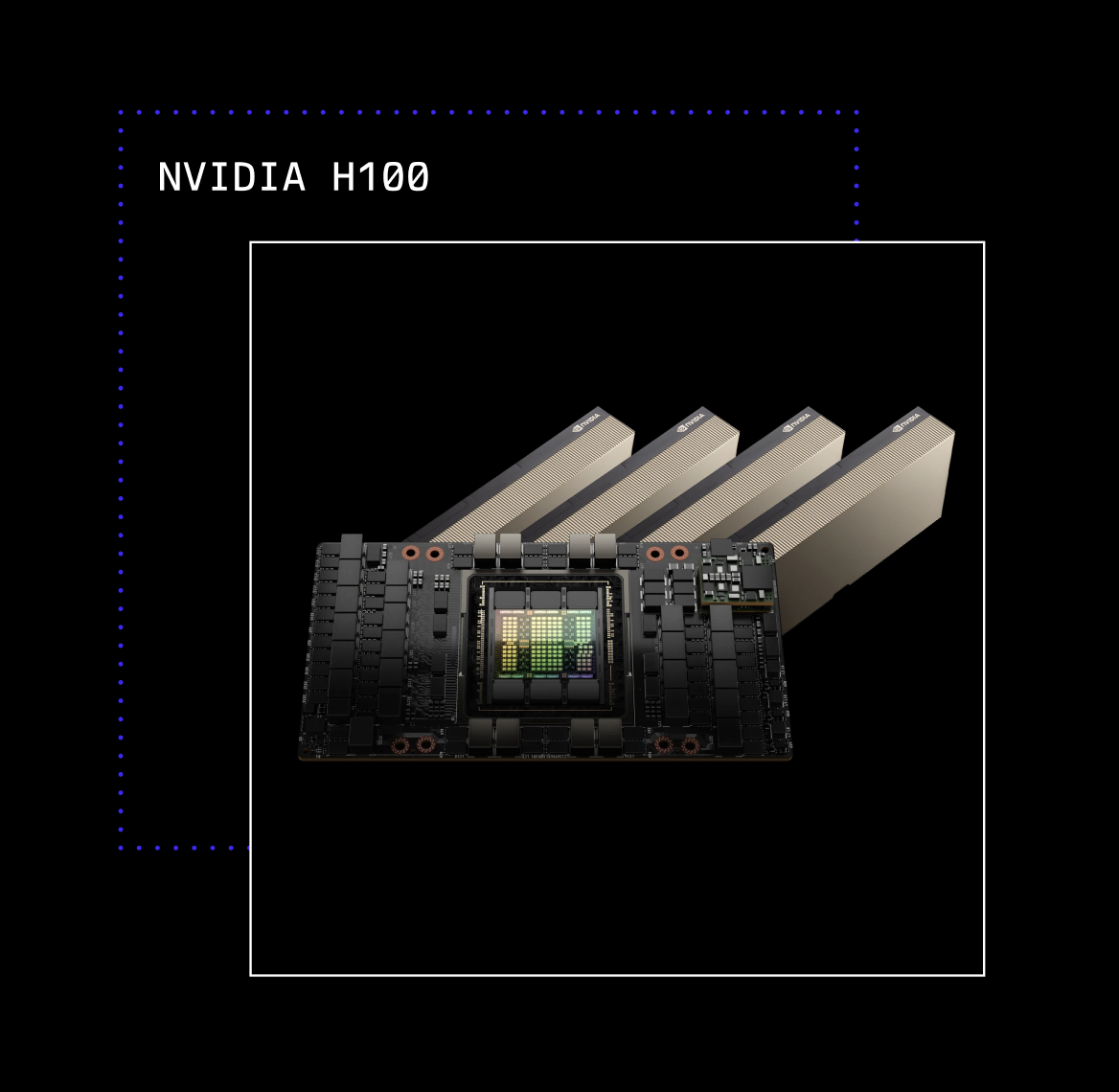

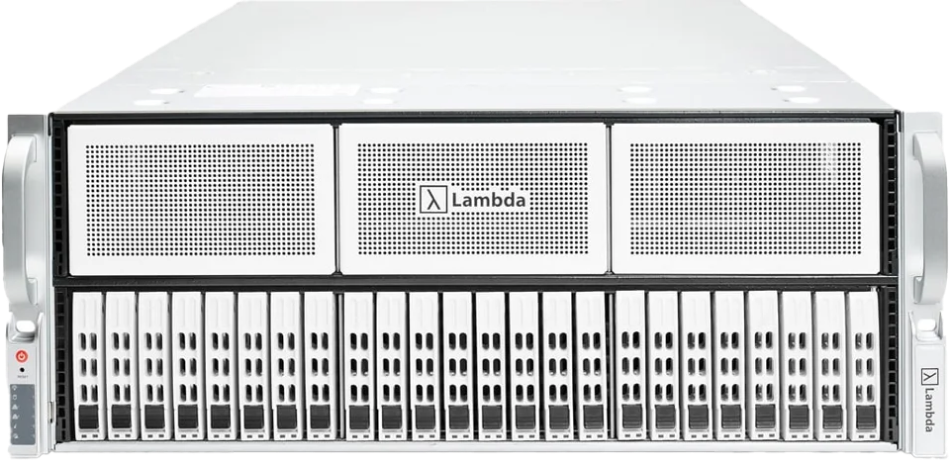

Lambda Scalar powered by NVIDIA H100 NVL GPUs

Engineered for your workload

Tell us about your research and we'll design a machine that's perfectly tailored to your needs.

- 8 GPUs from NVIDIA

- 192 cores and 384 threads

- 8,192 GB of memory

- 253 TB of NVMe SSDs

Easily scale from server to cluster

As your team's compute needs grow, Lambda's in-house HPC engineers and AI researchers can help you integrate Scalar and Hyperplane servers into GPU clusters designed for deep learning.

- Compute: Scaling to thousands of GPUs for distributed training or hyperparameter optimization.

- Storage: High-performance parallel file systems optimized for ML.

- Networking: Compute and storage fabrics for GPUDirect RDMA and GPUDirect Storage.

- Software: Fully integrated software stack for MLOps and cluster management.

Service and support by technical experts who specialize in machine learning

Lambda Premium Support includes:

- Up to 5-year extended warranty with advanced parts replacement

- Live technical support from Lambda's team of ML engineers

- Support for ML software included in Lambda Stack: PyTorch®, TensorFlow, CUDA, cuDNN, and NVIDIA drivers

Plug in. Start training.

Our servers include Lambda Stack, which manages frameworks like PyTorch® and TensorFlow. With Lambda Stack, you can stop worrying about broken GPU drivers and focus on your research.

- Zero configuration required: All your favorite frameworks come pre-installed.

- Easily upgrade PyTorch® and TensorFlow: When a new version is released, just run a simple upgrade command.

- No more broken GPU drivers: Drivers will "just work" and stay compatible with popular frameworks.
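As a quick sanity check after first boot, a short script can report which frameworks are importable. The following is a minimal sketch using only the Python standard library. The helper name `installed_frameworks` is hypothetical and not part of Lambda Stack. `torch` and `tensorflow` are the usual import names for PyTorch and TensorFlow.

```python
import importlib.util


def installed_frameworks(names=("torch", "tensorflow")):
    """Return a dict mapping each module name to True if it can be imported."""
    # find_spec returns None when a top-level module is not installed.
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, present in installed_frameworks().items():
        print(f"{name}: {'found' if present else 'missing'}")
```

If PyTorch is present, `torch.cuda.is_available()` and `torch.cuda.device_count()` can then confirm that the driver and the GPUs are visible to the framework.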

Your servers. Our data center.

Lambda Colocation makes it easy to deploy and scale your machine learning infrastructure. We'll manage racking, networking, power, cooling, hardware failures, and physical security. Your servers will run in a Tier 3 data center with state-of-the-art cooling that's designed for GPUs. You'll get remote access to your servers, just like a public cloud.

Fast support

If hardware fails, our on-site data center engineers can quickly debug and replace parts.

Optimal performance

Our state-of-the-art cooling keeps your GPUs cool to maximize performance and longevity.

High availability

Our Tier 3 data center has redundant power and cooling to ensure your servers stay online.

No network setup

We handle all network configuration and provide you with remote access to your servers.

Explore our research

ICCV 2021

Multiple Pairwise Ranking Networks for Personalized Video Summarization

We propose a model for personalized video summaries by conditioning the summarization process with predefined categorical labels.

ICCV 2019

HoloGAN: Unsupervised Learning of 3D Representations from Natural Images

We propose a novel generative adversarial network (GAN) for the task of unsupervised learning of 3D representations from natural images.

NeurIPS 2018

RenderNet: A Deep ConvNet for Differentiable Rendering from 3D Shapes

We present a differentiable rendering convolutional network with a novel projection unit that can render 2D images from 3D shapes.

SIGGRAPH Asia 2019

Adversarial Monte Carlo Denoising with Conditioned Auxiliary Feature Modulation

We demonstrate that GANs can help denoiser networks produce more realistic high-frequency details and global illumination.

Technical Specifications

GPUs

Up to 8 dual-slot PCIe GPUs. Options include:

- NVIDIA H100 NVL: 94 GB of HBM3, 14,592 CUDA cores, 456 Tensor Cores, PCIe 5.0 x16

- NVIDIA L40S: 48 GB of GDDR6, 18,176 CUDA cores, 568 Tensor Cores, PCIe 4.0 x16

- NVIDIA RTX 6000 Ada Generation: 48 GB of GDDR6, 18,176 CUDA cores, 568 Tensor Cores, PCIe 4.0 x16

- NVIDIA RTX 5000 Ada Generation: 32 GB of GDDR6, 12,800 CUDA cores, 400 Tensor Cores, PCIe 4.0 x16

- NVIDIA RTX 4500 Ada Generation: 24 GB of GDDR6, 7,680 CUDA cores, 240 Tensor Cores, PCIe 4.0 x16

- NVIDIA RTX 4000 Ada Generation: 16 GB of GDDR6, 6,144 CUDA cores, 192 Tensor Cores, PCIe 4.0 x16
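When comparing options, it can help to total the GPU memory of a full chassis. The sketch below simply multiplies the per-GPU figures listed above by the GPU count. The names `GPU_MEMORY_GB` and `total_gpu_memory_gb` are hypothetical. The result is a sum, not a single pooled address space, so how much of it a job can use depends on how the framework shards the model and data.

```python
# Per-GPU memory in GB, copied from the options listed above.
GPU_MEMORY_GB = {
    "H100 NVL": 94,
    "L40S": 48,
    "RTX 6000 Ada": 48,
    "RTX 5000 Ada": 32,
    "RTX 4500 Ada": 24,
    "RTX 4000 Ada": 16,
}


def total_gpu_memory_gb(model, gpu_count=8):
    """Sum of GPU memory across a chassis with gpu_count identical GPUs."""
    if not 1 <= gpu_count <= 8:
        raise ValueError("this chassis holds between 1 and 8 GPUs")
    return GPU_MEMORY_GB[model] * gpu_count


if __name__ == "__main__":
    for model in GPU_MEMORY_GB:
        print(f"8x {model}: {total_gpu_memory_gb(model)} GB")
```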

Processors

2 AMD EPYC or Intel Xeon processors. Options include:

- AMD EPYC 9004 (Genoa) Series processors with up to 192 cores total

- 4th Gen Intel Xeon Scalable (Sapphire Rapids) processors with up to 112 cores total

System Memory

- Up to 8 TB of 4800 MHz DDR5 ECC RAM in 32 DIMM slots

Storage

- Up to 491.52 TB of storage via 16 hot-swappable U.2 NVMe SSDs

- Up to 61.44 TB of storage via 8 hot-swappable 2.5" SATA SSDs
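The maximum capacities above imply a per-drive size, which is useful when planning a partially populated chassis. This is a rough sketch that assumes all drives in a bay group have equal raw capacity. The function name `per_drive_tb` is hypothetical, and usable space after RAID or file system overhead will be lower.

```python
def per_drive_tb(total_tb, drive_count):
    """Raw capacity per drive, assuming identical drives in every bay."""
    if drive_count <= 0:
        raise ValueError("drive_count must be positive")
    return round(total_tb / drive_count, 2)


if __name__ == "__main__":
    print("U.2 NVMe:", per_drive_tb(491.52, 16), "TB per drive")
    print("2.5-inch SATA:", per_drive_tb(61.44, 8), "TB per drive")
```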

Networking

Built-in networking:

- 2 RJ45 10 Gbps BASE-T LAN ports

- 1 RJ45 1 Gbps BASE-T LAN out-of-band management port

Optional high-speed NIC. Options include:

- NVIDIA ConnectX-7 400 Gb/s NDR InfiniBand Adapter, OSFP56, PCIe 5.0 x16

- NVIDIA ConnectX-7 200 Gb/s NDR200 InfiniBand Adapter, OSFP56, PCIe 5.0 x16

- NVIDIA ConnectX-7 200 Gb/s NDR200 InfiniBand/VPI Adapter, QSFP112, PCIe 5.0 x16

- NVIDIA ConnectX-6 200 Gb/s HDR InfiniBand/VPI Adapter, QSFP56, PCIe 4.0 x16

- NVIDIA ConnectX-6 100 Gb/s HDR100 InfiniBand/VPI Adapter, 1x QSFP56, PCIe 4.0 x16

- NVIDIA ConnectX-6 Dx EN 200 Gb/s Ethernet Adapter, QSFP56, PCIe 4.0 x16

- NVIDIA ConnectX-6 Dx EN 100 Gb/s Ethernet Adapter, QSFP56, PCIe 4.0 x16

- NVIDIA ConnectX-5 EN 100 Gb/s Ethernet Adapter, QSFP28, PCIe 3.0 x16
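To put the NIC options in perspective, the line rate gives a lower bound on how long it takes to move a dataset between nodes. The sketch below is an idealized estimate only: it assumes decimal units (1 TB = 8,000 Gb) and ignores protocol overhead, storage throughput, and congestion, so real transfers will take longer. The function name `ideal_transfer_seconds` is hypothetical.

```python
def ideal_transfer_seconds(size_tb, link_gbps):
    """Lower-bound transfer time for size_tb terabytes over a link_gbps link."""
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    gigabits = size_tb * 8000  # 1 TB = 1000 GB = 8000 Gb (decimal units)
    return gigabits / link_gbps


if __name__ == "__main__":
    for rate in (400, 200, 100, 10):
        seconds = ideal_transfer_seconds(1, rate)
        print(f"1 TB at {rate} Gb/s: at least {seconds:.0f} s")
```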

Power

- 4 hot-swappable 2000 watt 80 PLUS Titanium PSUs

- 2 + 2 redundancy

- 220-240 VAC / 10-9.8 A / 50-60 Hz

- 220-240 VAC / 2000 watts
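The 2 + 2 layout can be read as two PSUs carrying the load with two held in reserve, which caps the redundant power budget at half the installed capacity. The sketch below works through that arithmetic under this reading. The function names are hypothetical, and the calculation ignores derating, efficiency losses, and facility circuit limits.

```python
def power_budget(psu_watts=2000, psu_count=4, redundant=2):
    """Return (usable_watts, installed_watts) for an N+M PSU layout."""
    if not 0 <= redundant < psu_count:
        raise ValueError("redundant must be between 0 and psu_count - 1")
    usable = psu_watts * (psu_count - redundant)
    installed = psu_watts * psu_count
    return usable, installed


def fits_budget(estimated_load_watts, usable_watts):
    """True if the estimated load can be carried without using spare PSUs."""
    return estimated_load_watts <= usable_watts


if __name__ == "__main__":
    usable, installed = power_budget()
    print(f"Installed: {installed} W, usable with 2 + 2 redundancy: {usable} W")
```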

Front I/O

- Power button

- Reset button

- Power LED

- ID button with LED

- Information LED

- Power supply failure LED

- 2 LAN activity LEDs, one for each RJ45 1 Gbps BASE-T LAN port

- Storage drive activity LED

Rear I/O

- VGA port

- 2 USB 3.0 ports

- 2 RJ45 10 Gbps BASE-T LAN ports

- 1 RJ45 1 Gbps BASE-T LAN out-of-band management port

Accessories

- Rackmounting kit

- 4 configurable C19 power cables

Physical

- Form factor: 4U rackmount

- Width: 17.2 inches (437 mm)

- Height: 7.0 inches (179 mm)

- Depth: 29.0 inches (737 mm)

- Server weight: 73 lbs (33 kg)

- Rackmounting kit weight: 5 lbs (2.3 kg)

- Total weight with packaging: 99 lbs (45 kg)
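For rack planning, the form factor and weights above translate into a simple space and load estimate. The sketch below assumes a 42U rack, which is a common size but not something this page specifies. Both function names are hypothetical. In practice, power and cooling per rack, plus space for switches and PDUs, usually limit density before rack units do.

```python
def servers_per_rack(rack_units=42, server_units=4, reserved_units=0):
    """How many 4U servers fit after reserving space for other equipment."""
    return (rack_units - reserved_units) // server_units


def rack_load_lbs(server_count, server_lbs=73, kit_lbs=5):
    """Combined weight of servers and their rackmounting kits."""
    return server_count * (server_lbs + kit_lbs)


if __name__ == "__main__":
    count = servers_per_rack(reserved_units=2)  # e.g. 2U kept for a switch
    print(f"{count} servers, about {rack_load_lbs(count)} lbs")
```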