Lambda Private Cloud

with NVIDIA DGX

Leverage AI-ready data centers to host NVIDIA DGX systems

NVIDIA DGX SuperPOD™

with Blackwell GPUs

NVIDIA DGX™ B200 and NVIDIA DGX SuperPOD™ with DGX GB200 systems are coming soon from Lambda. NVIDIA GB200 Grace Blackwell Superchips combine two Blackwell GPUs and one NVIDIA Grace CPU for 30X faster real-time LLM inference and 4X faster training performance than the NVIDIA Hopper architecture generation.

Own the best from NVIDIA

NVIDIA DGX™ GB200

Rack-scale architecture with 72 Blackwell GPUs for trillion-parameter training and inference.

NVIDIA DGX™ B200

Next-generation performance with 8

Blackwell GPUs for diverse ML workloads.

Blackwell GPUs for diverse ML workloads.

NVIDIA DGX™ H100

The proven choice for today's ML

infrastructure with 8 Hopper GPUs.

infrastructure with 8 Hopper GPUs.

Achieve peace of mind when standing up AI infrastructure with NVIDIA AI Enterprise

AI application frameworks, AI development tools and microservices, optimized to run on DGX systems. Your ML Engineers will get hundreds of head-starts on AI models and tools for training, tuning, and deploying your AI solutions. NVIDIA AI Enterprise is supported directly by NVIDIA's AI experts for faster time to results and maximum uptime.

Get the best of NVIDIA DGX with design, installation, hosting, and support from Lambda

Lambda is published at NeurIPS, ICCV & Siggraph and operates one of the largest fleets of NVIDIA GPUs in the world. We’ve used NVIDIA DGX systems to produce groundbreaking AI research and help companies design, scale, deploy, and support.

Lambda expertise

Get the tailored solution you need for optimal ML performance.

Rapid deployment

Sales, Design, and Delivery timelines that will exceed your expectations.

Flexible delivery

Choose between Lambda-hosted clusters or on-premises deployment in your datacenter.

Design & Deploy

Our datacenter

Leverage proven ML infrastructure in Lambda's secure data halls with VPN access, high-bandwidth internet access, and cloud direct connect. Learn more.

Your datacenter

Control every aspect of your ML infrastructure with an on-prem deployment in your data center. Installed by NVIDIA and Lambda engineers with expertise in large-scale DGX infrastructure.

Secure the latest GPUs without getting locked into the hardware forever

Lambda offers NVIDIA lifecycle management services to make sure your DGX investment is always at the leading edge of NVIDIA architectures. Deploy now using today's best solution and be one of the first to transition to the next generation. NVIDIA and Lambda engineers manage the entire upgrade and scaling process for seamless transitions.

Lambda is proud to be an NVIDIA Elite DGX AI Compute Systems Partner

Lambda has been awarded NVIDIA's 2024 Americas AI Excellence Partner of the Year, marking our fourth consecutive year as an NVIDIA Partner Of The Year.

“Leading enterprises recognize the incredible capabilities of AI and are building it into their operations to transform customer service, sales, operations, and many other key functions. Lambda’s deep expertise, combined with cutting-edge NVIDIA technology, is helping customers create flexible, scalable AI deployments on premises, in the cloud, or at a colocation data center.”Craig Weinstein, Vice President of the Americas Partner Organization

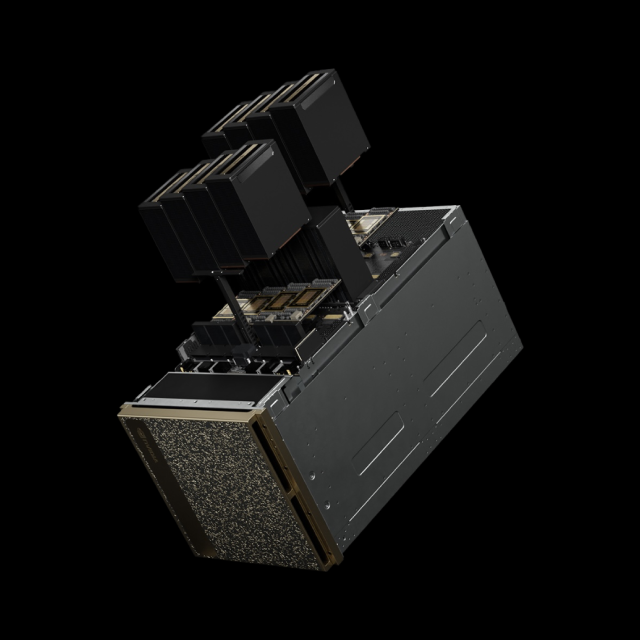

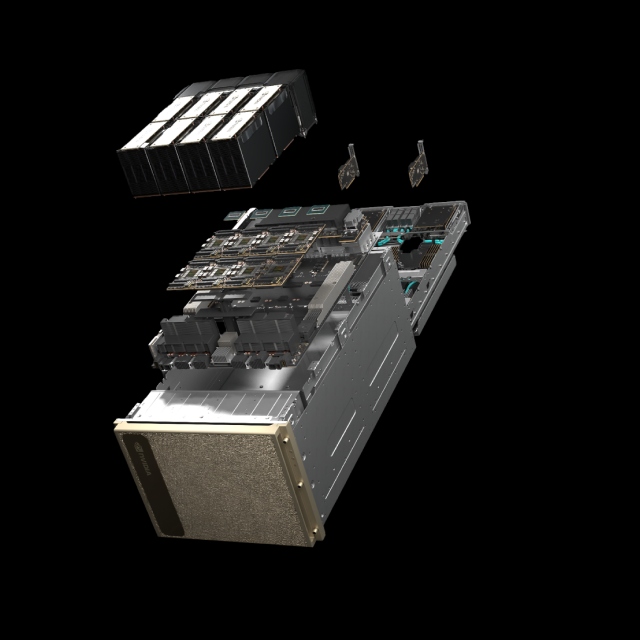

NVIDIA DGX™ System

Start with a single NVIDIA DGX B200 or H100 system with 8 GPUs. The DGX is a unified AI platform for every stage of the AI pipeline, from training to fine-tuning to inference.

- 10U system with 8x NVIDIA B200 Tensor Core GPUs

- 8U system with 8 x NVIDIA H100 Tensor Core GPUs

- NVLink and NVSwitch fabric for high-speed GPU to GPU communication

- Equipped with ConnectX-7 400Gb network interfaces for BasePOD or SuperPOD scaling

- Includes NVIDIA AI Enterprise and direct support from NVIDIA

NVIDIA DGX BasePOD™ and SuperPOD™

Scale from two to thousands of interconnected DGX systems with optimized networking, storage, management, and software platforms all supported by NVIDIA and Lambda.

- NVIDIA GB200, B200 or H100 Tensor Core GPUs

- From single rack to full data center scale

- NVIDIA Quantum-2 InfiniBand compute fabric

- Highest performance parallel storage solutions

- Design, installation, hosting, and support from NVIDIA and Lambda