Lambda Scalar with NVIDIA H200 NVL GPUs

Take advantage of:

- Enhanced Memory Capacity: The H200 NVL offers 141 GB of HBM3e memory, up from 94 GB on the H100 NVL. This is a substantial upgrade for the most memory-intensive use cases.

- Accelerated Data Throughput: Peak memory bandwidth rises from 3.9 TB/s (H100 NVL) to 4.8 TB/s, for faster data access and processing.

- Improved NVLink Support: Supports both 2-way and 4-way NVLink configurations, enabling faster GPU-to-GPU communication across a larger group of GPUs.

- Massive VRAM Scalability: Scale up to 8 GPUs per Scalar system for a total of 1,128 GB of VRAM (8 × 141 GB), ideal for demanding AI and machine learning workloads.
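To make the capacity figures concrete, here is a minimal sketch of a weight-only sizing check. It uses the 141 GB per-GPU and 8-GPU-per-system figures quoted above. The helper names (`total_vram_gb`, `weights_gb`, `fits`) are hypothetical, and the estimate is deliberately rough: it assumes 1 GB = 10^9 bytes and ignores KV cache, activations, optimizer state, and framework overhead, all of which need extra headroom in practice.

```python
# Hypothetical sizing helpers: a rough, weight-only estimate.
# Ignores KV cache, activations, optimizer state and framework overhead.
H200_NVL_GB = 141  # per-GPU HBM3e capacity quoted for the H200 NVL
MAX_GPUS = 8       # maximum GPUs per Scalar system quoted above


def total_vram_gb(num_gpus: int, per_gpu_gb: int = H200_NVL_GB) -> int:
    """Aggregate VRAM across num_gpus identical GPUs."""
    if not 1 <= num_gpus <= MAX_GPUS:
        raise ValueError(f"num_gpus must be between 1 and {MAX_GPUS}")
    return num_gpus * per_gpu_gb


def weights_gb(num_params_billions: float, bytes_per_param: float) -> float:
    """Approximate size of model weights in GB (1 GB = 1e9 bytes)."""
    return num_params_billions * bytes_per_param


def fits(num_params_billions: float, bytes_per_param: float, num_gpus: int) -> bool:
    """True if the weights alone fit in the aggregate VRAM of num_gpus GPUs."""
    return weights_gb(num_params_billions, bytes_per_param) <= total_vram_gb(num_gpus)


if __name__ == "__main__":
    print(total_vram_gb(8))    # 8 x 141 GB
    print(fits(405, 2, 4))     # 405B params at 16-bit = 810 GB vs 564 GB
    print(fits(405, 2, 8))     # 810 GB vs 1,128 GB
```

In this sketch, a 405B-parameter model stored at 2 bytes per parameter needs about 810 GB for its weights. That exceeds four GPUs (564 GB) but fits within a full 8-GPU system (1,128 GB), with the remainder available for runtime memory.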


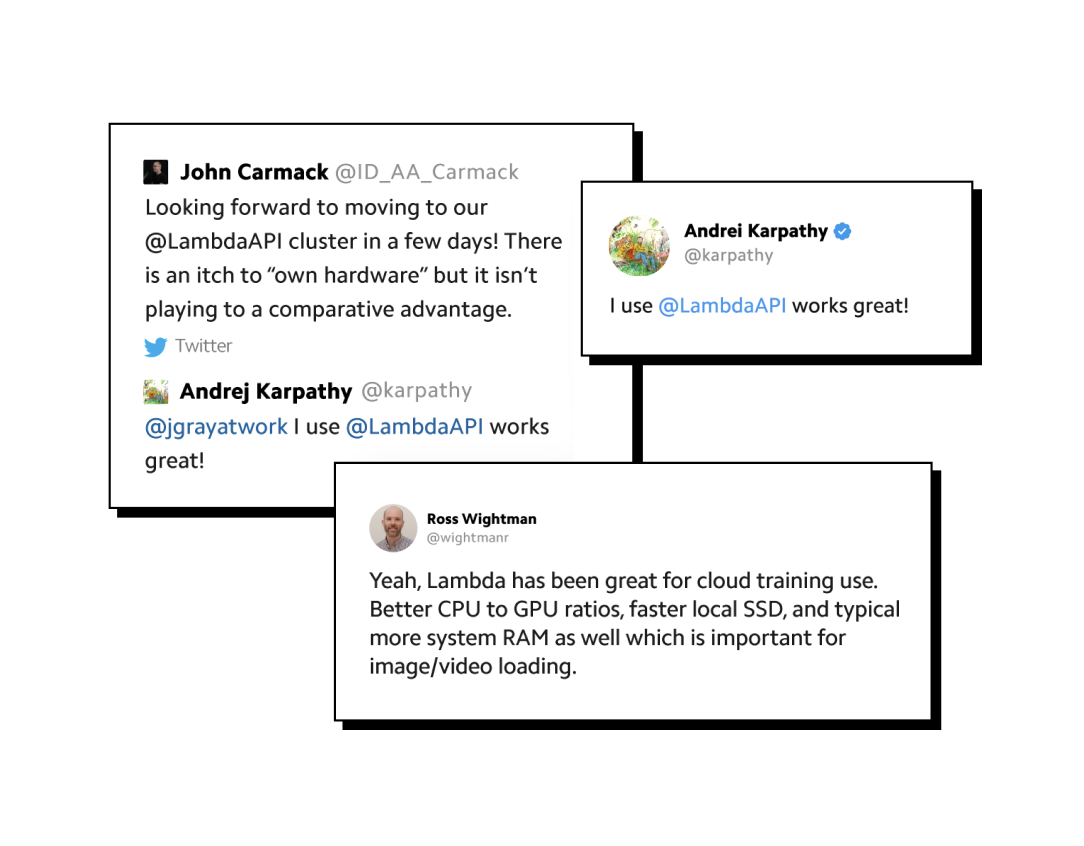

Trusted by thousands of enterprises and research centers

Join thousands of enterprise and research teams globally that rely on Lambda for AI compute.

Looking for something else?

Lambda's GPU Cloud is trusted by industry pioneers who have helped shape modern AI.