NVIDIA H100 & H200 Tensor Core GPUs

The NVIDIA H100 and NVIDIA H200 are the world's most powerful GPUs, based on the latest NVIDIA Hopper architecture, and available in PCIe and SXM form factors.

Lambda Cloud powered by

NVIDIA H100 & H200

Introducing

1-Click Clusters

On-demand GPU clusters featuring NVIDIA H100 Tensor Core GPUs with Quantum-2 InfiniBand. No long-term contract required. Self-serve directly from the Lambda Cloud dashboard.

NVIDIA H200 supercharges generative AI

As the first GPU with HBM3e, H200’s faster, larger memory fuels the acceleration of generative AI and LLMs while advancing scientific computing for HPC workloads.

Nearly double the GPU memory

The NVIDIA H200 GPU, with 141GB of HBM3e memory, nearly doubles capacity over the prior generation H100. The H200's increased GPU memory capacity allows larger models to be loaded into memory or larger batch sizes for more efficient training of massive LLMs.

Unmatched memory bandwidth

NVIDIA H200’s HBM3e memory bandwidth of 4.8TB/s is 1.4x faster than NVIDIA H100 with HBM3. Increased memory bandwidth and capacity is critical for the growing data sets and model sizes of today’s leading LLMs.

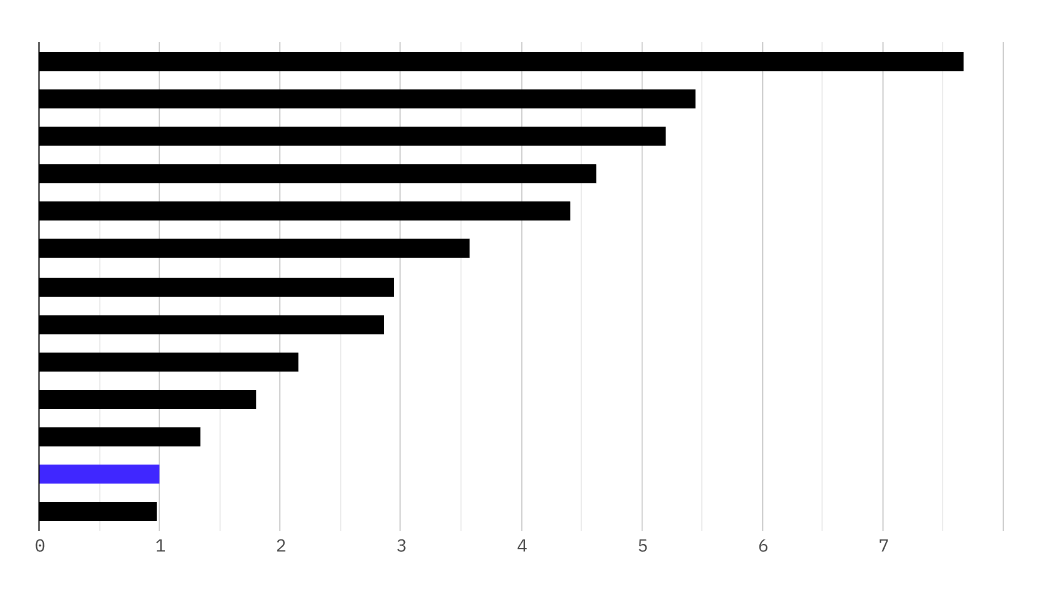

Lambda GPU comparisons

Lambda's GPU benchmarks for deep learning are run on more than a dozen different GPU types in multiple configurations. GPU performance is measured running models for computer vision (CV), natural language processing (NLP), text-to-speech (TTS), and more. Visit our benchmarks page to get started.

Tech Specs

|

.png.png?width=500&height=400&name=h100-ai-every-scale-single-hgx-bf3-2631633%20(1).png.png) |

|

|

| Model | H200 SXM | H100 SXM | H100 PCIe |

| GPU memory | 141GB HBM3e at 4.8 TB/s | 80GB HBM3 at 3.35 TB/s | 80GB HBM2e at 2 TB/s |

| Form Factor | 8-GPU SXM5 | 8-GPU SXM5 | PCIe Dual-slot |

| Interconnect | 900GB/s NVLink | 900GB/s NVLink | 600GB/s NVLink |

| Cloud Options | Lambda Private Cloud | Lambda On-Demand Cloud Lambda Private Cloud NVIDIA DGX Cloud |

Lambda On-Demand Cloud |

| Server options | NVIDIA DGX Server NVIDIA DGX SuperPOD |

Lambda Scalar | |

| NVIDIA AI Enterprise | Add-on | Add-on | Included |

Lambda On-Demand

Cloud powered by NVIDIA H100 GPUs

On-demand HGX H100 systems with 8x NVIDIA H100 SXM GPUs are now available on Lambda Cloud for only $2.99/hr/GPU. With H100 SXM you get:

- Flexibility for users looking for more compute power to build and fine-tune generative AI models

- Enhanced scalability

- High-bandwidth GPU-to-GPU communication

- Optimal performance density

Resources for deep learning

Explore Lambda's deep learning materials including blog, technical documentation, research and more. We've curated a diverse set of resources just for ML and AI professionals to help you on your journey.