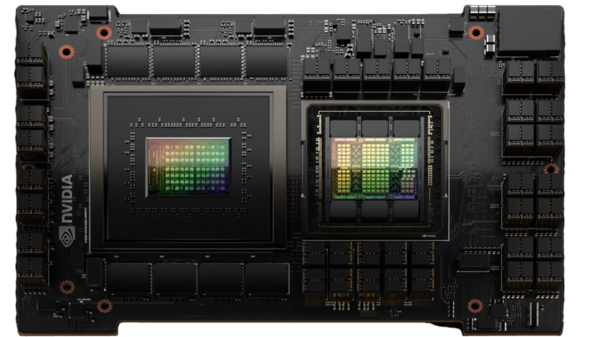

NVIDIA GH200 Grace Hopper™ Superchip

The NVIDIA GH200 Grace Hopper Superchip’s breakthrough design forms a high-bandwidth connection between the NVIDIA Grace™ CPU and Hopper™ GPU to enable the era of accelerated computing and generative AI. Now available on-demand, starting at $3.19/hour.

Lambda Private Cloud powered by NVIDIA GH200

Dedicated, bare-metal, hosted clusters optimized for distributed training. A single GH200 has 576 GB of coherent memory (480 GB of LPDDR5X CPU memory plus 96 GB of HBM3 GPU memory), offering unmatched efficiency and price for its memory footprint. Reserve a cloud cluster with Lambda and be one of the first in the industry to train LLMs on the most versatile compute platform in the world, the NVIDIA GH200.

Power and efficiency with the Grace CPU

The NVIDIA Grace CPU was designed for high single-threaded performance, high memory bandwidth, and outstanding data-movement capabilities. This design strikes the optimal balance of performance and energy efficiency.

Performance and speed with the NVIDIA GH200

GH200 delivers up to 10X higher performance compared to NVIDIA A100 for applications running terabytes of data, helping scientists and researchers reach unprecedented solutions for the world's most complex problems.

The Power of Coherent Memory

NVIDIA GH200 delivers 7X the CPU-to-GPU bandwidth typically found in accelerated systems. The NVLink-C2C connection provides unified cache coherence with a single memory address space that combines system memory and HBM GPU memory for simplified programmability.
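
To put the 7X figure in perspective, here is a rough Python sketch that compares peak transfer times over the CPU-GPU link. The 900 GB/s value comes from the spec table on this page; the 128 GB/s baseline is an assumption (the commonly quoted bidirectional peak of a PCIe Gen5 x16 link) and is not stated here. The function and constant names are illustrative only, and real transfers will run below these peak rates.

```python
# Peak-rate estimate only; sustained bandwidth in practice is lower.
NVLINK_C2C_GB_PER_S = 900.0     # NVLink-C2C bandwidth from the GH200 spec table
PCIE_GEN5_X16_GB_PER_S = 128.0  # ASSUMPTION: PCIe Gen5 x16 bidirectional peak


def transfer_seconds(size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Return the idealized time to move size_gb at the given peak bandwidth."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / bandwidth_gb_per_s


# Ratio of the two peak bandwidths, roughly the "7X" quoted above.
bandwidth_ratio = NVLINK_C2C_GB_PER_S / PCIE_GEN5_X16_GB_PER_S

if __name__ == "__main__":
    size_gb = 96.0  # e.g. enough data to fill the HBM3 once
    print(f"bandwidth ratio: {bandwidth_ratio:.2f}x")
    print(f"NVLink-C2C: {transfer_seconds(size_gb, NVLINK_C2C_GB_PER_S):.3f} s")
    print(f"PCIe Gen5 x16 (assumed): {transfer_seconds(size_gb, PCIE_GEN5_X16_GB_PER_S):.3f} s")
```

Under these assumptions, the ratio of the two peak rates works out to about 7, consistent with the claim above.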

NVIDIA GH200 tech specs

| CPU | GPU | CPU memory | GPU memory | NVLink-C2C bandwidth |
| --- | --- | --- | --- | --- |
| Grace 72-core CPU | H100 GPU | 480 GB LPDDR5X at 512 GB/s | 96 GB HBM3 at 4 TB/s | 900 GB/s |
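
As a back-of-the-envelope illustration of what these memory figures mean for model sizing, the following Python sketch checks where a model's weights would land on a single GH200. It is a simplification under stated assumptions: it counts weights only (no activations, optimizer state, or KV cache), uses decimal gigabytes, and defaults to 2 bytes per parameter (16-bit weights). The function names are hypothetical and not part of any NVIDIA or Lambda API.

```python
CPU_MEMORY_GB = 480  # LPDDR5X, from the spec table
GPU_MEMORY_GB = 96   # HBM3, from the spec table
COHERENT_MEMORY_GB = CPU_MEMORY_GB + GPU_MEMORY_GB  # 576 GB total


def weights_gb(params_billions: float, bytes_per_param: int = 2) -> float:
    """Approximate weight footprint in decimal GB (1e9 params * bytes / 1e9)."""
    return params_billions * bytes_per_param


def placement(params_billions: float, bytes_per_param: int = 2) -> str:
    """Classify where the weights alone would fit on one GH200."""
    size = weights_gb(params_billions, bytes_per_param)
    if size <= GPU_MEMORY_GB:
        return "fits in HBM3"
    if size <= COHERENT_MEMORY_GB:
        return "fits in coherent memory"
    return "exceeds a single GH200"


if __name__ == "__main__":
    for billions in (7, 70, 400):
        print(f"{billions}B params: {weights_gb(billions):.0f} GB, {placement(billions)}")
```

Training workloads need considerably more memory than the weights alone, so treat the result as a lower bound rather than a sizing guarantee.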

Learn more about NVIDIA GH200 on Lambda

As AI and HPC demands grow, so does the need for scalable compute resources. The NVIDIA GH200 Grace Hopper Superchip on Lambda On-Demand Cloud provides a high-performance, cost-effective solution for AI/ML and HPC teams. Whether you’re overcoming memory constraints or bandwidth limits, the GH200 Superchip is ready to power your next big project.