GPU Clusters Designed for Deep Learning

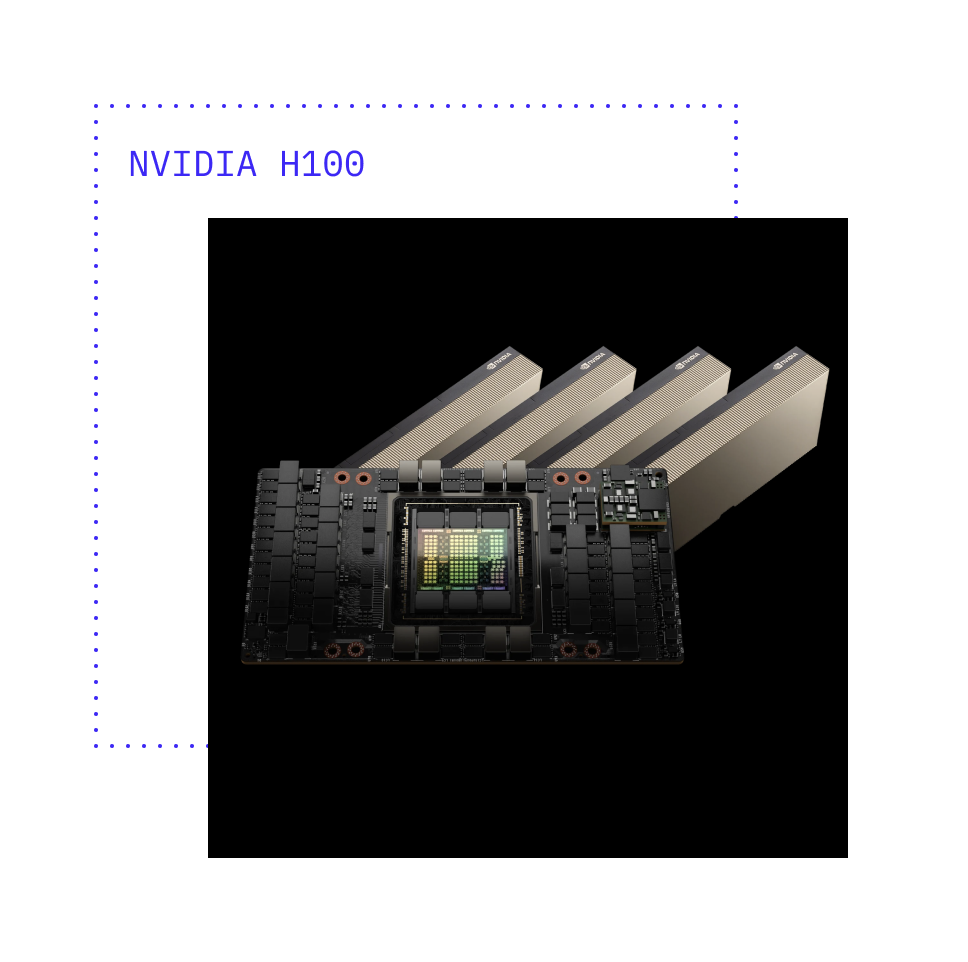

Lambda Echelon powered by NVIDIA H100 GPUs

Lambda Echelon GPU clusters

- Pre-engineered and optimized for deep learning training
- Fully integrated compute, storage, networking, and MLOps tools
- Delivered in hours to weeks instead of months
- End-to-end support from Lambda's IT & ML engineers
- Lambda is the single vendor for your entire cluster: hardware, software, infrastructure, installation, support, and expansion

Flexible deployment options to meet your team's needs

Cloud: Reserve a fully-integrated cluster in Lambda's cloud

Available in minutes/hours.

Lambda Private Cloud provides dedicated GPU clusters with the same networking and storage as our on-prem and colo systems. Access can be granted in a matter of hours with contract lengths starting at six months.

Colocation: Your cluster installed in Lambda's data center

Available in hours/days.

Deploy faster by leveraging Lambda’s data center infrastructure optimized for next-gen GPU clusters. Colo clusters are installed and supported on-site by Lambda’s engineering team, reducing downtime and allowing for easy upgrades and servicing.

On-prem: Your cluster installed in your data center

Available in days/weeks.

Echelon clusters are delivered fully racked, configured, and ready for Lambda to install in your data center. We work with your infrastructure and IT teams to comply with power, cooling, and integration requirements.

Engineered to scale from a single rack to an AI supercomputer

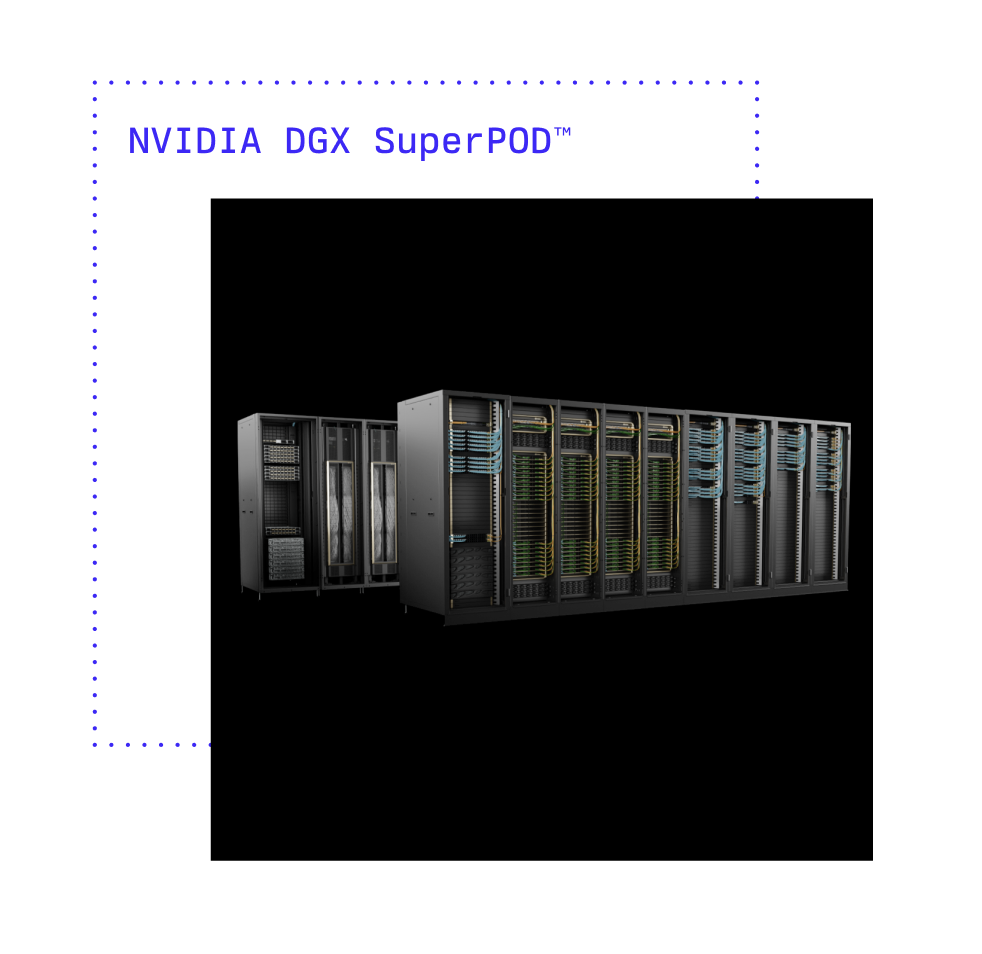

Echelon clusters come fully integrated with high-bandwidth networking, high-performance parallel storage, cluster management, and optional MLOps platforms. They can be built with NVIDIA PCIe, HGX, or DGX GPU compute nodes to match your performance, workload, software, and budget requirements.

Lambda Scalar PCIe

Lambda Hyperplane HGX

NVIDIA DGX™ H100

Service and support by technical experts who specialize in machine learning

Lambda Premium Support includes:

- Full-stack support covering compute, networking, storage, MLOps, and all supporting frameworks, software, and drivers
- Live technical support from Lambda's team of ML and supercomputing engineers, with optional on-site services
- Up to 5-year extended warranty with advance parts replacement
- Deep support from NVIDIA for DGX BasePOD and SuperPOD clusters

NVIDIA DGX SuperPOD™ with Lambda

Download the Echelon white paper to learn more