Get into the ARMs race: future-proof your workloads now with Lambda

Lambda is committed to smoothing your transition to the future. We're excited to announce that you can start testing your applications on the current-generation NVIDIA GH200 Superchip today. This chip offers the same powerful combination of ARM64 CPU and Tensor Core GPU (one H100, in GH200’s case) as the Grace Blackwell platform.

Our Machine Learning Research team has been actively exploring the capabilities of GH200. We're happy to share what we've learned to support your work.
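As a starting point for testing on ARM64, here is a minimal Python sketch that reports whether the current host is a 64-bit ARM machine and whether the modules your workload depends on can be found there. It is an illustration only, not Lambda tooling: the helper names (`is_arm64`, `missing_modules`, `describe_host`) and the module list are assumptions chosen for this example, and it uses only the Python standard library.

```python
import importlib.util
import platform

# Machine strings that commonly denote 64-bit ARM
# (Linux typically reports "aarch64", macOS reports "arm64").
ARM64_NAMES = {"aarch64", "arm64"}


def is_arm64(machine=None):
    """Return True if the given (or current) machine string names a 64-bit ARM CPU."""
    name = machine if machine is not None else platform.machine()
    return name.lower() in ARM64_NAMES


def missing_modules(names):
    """Return the top-level module names that cannot be located in this environment."""
    missing = []
    for name in names:
        try:
            found = importlib.util.find_spec(name) is not None
        except (ImportError, ValueError):
            found = False
        if not found:
            missing.append(name)
    return missing


def describe_host(required=()):
    """Summarize the host architecture and any required modules that are absent."""
    machine = platform.machine()
    return {
        "machine": machine,
        "arm64": is_arm64(machine),
        "python": platform.python_version(),
        "missing": missing_modules(required),
    }


if __name__ == "__main__":
    # Hypothetical dependency list; replace with your own workload's imports.
    print(describe_host(required=["json", "sqlite3"]))
```

Running the same script on your existing x86_64 machines and on a GH200 instance gives a quick side-by-side view of which dependencies still need an ARM64 build.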

NVIDIA Blackwell Architecture

A New Class of AI Superchip

Blackwell GPUs pack 208 billion transistors and a 10 TB/s chip-to-chip interconnect, delivering unmatched performance for AI workloads.

Second-generation Transformer Engine

Custom Blackwell Tensor Core technology, with new precision formats and micro-tensor scaling, doubles model performance while maintaining high accuracy.

Confidential Computing for secure AI

Blackwell is the first TEE-I/O capable GPU, protecting sensitive data and AI models from unauthorized access without sacrificing performance.

Fifth-generation NVIDIA NVLink™

The latest interconnect scales up to 576 GPUs, delivering 1.8 TB/s of bidirectional bandwidth per GPU for seamless multi-server communication.

Decompression Engine

Blackwell’s Decompression Engine leverages a 900 GB/s high-speed link to the NVIDIA Grace CPU, accelerating database queries for the highest performance in data science and analytics.

Reliability, Availability, and Serviceability (RAS) Engine

The RAS Engine continuously monitors system performance, proactively identifying issues and reducing downtime through effective diagnostics and remediation.

Why Lambda

NVIDIA GPUs simplified

Deploy scalable GPU compute quickly and easily.

Built for AI/ML

Our state-of-the-art cooling keeps your GPUs at stable operating temperatures to maximize performance and longevity.

Early access advantage

Skip the infrastructure config and get right to training and inference.

Self-serve

Use the Lambda dashboard or API to spin up, manage, and monitor your usage—no expertise in infrastructure config required.

Pre-configured environments

No more setup hassles. We’ve pre-installed all the drivers and libraries. Just log in and start building your AI models.

Industry-leading partnerships

Be among the first to access the latest AI compute and research breakthroughs.

High-performance networking

Train faster across multiple nodes with low-latency, high-bandwidth connectivity through NVIDIA Quantum-2 InfiniBand.

Seamless upgrades

Easily transition to the latest NVIDIA GPUs as they become available, without needing to rebuild your entire infrastructure.

24x7 support

Our world-class support team is there to help whenever you need us.

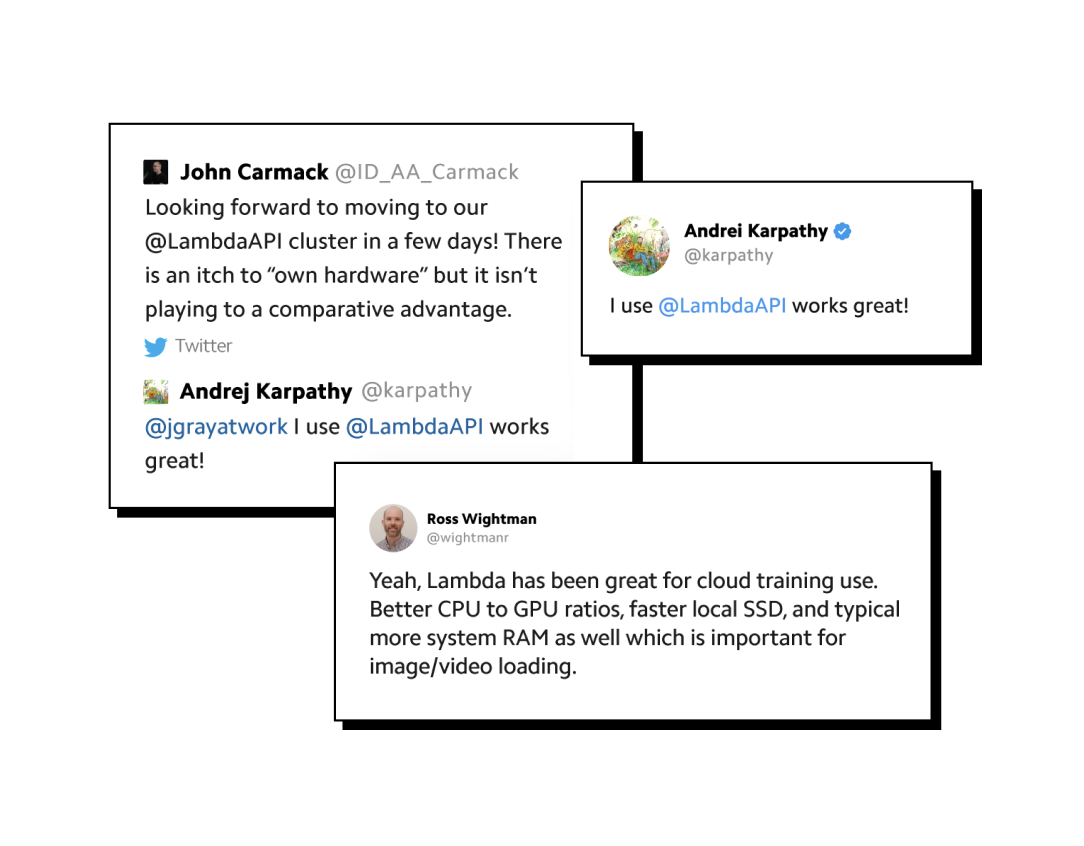

Trusted by thousands of enterprises and research centers
