The AI Developer Cloud

On-demand NVIDIA GPU instances & clusters for AI training & inference.

Join the ARMs race: GH200s for $1.49/hr until the end of March to help you get ARM64-ready.

The only cloud focused on enabling AI developers

1-Click Clusters

On-demand GPU clusters featuring NVIDIA H100 Tensor Core GPUs with NVIDIA Quantum-2 InfiniBand. No long-term contract required.

On-Demand Instances

Spin up on-demand GPU Instances billed by the hour. NVIDIA H100 instances starting at $2.49/hr.
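As a rough illustration of hourly billing, the sketch below estimates what a job might cost at the quoted starting rate. The function name `estimate_cost` and the `round_up_hours` option are hypothetical, introduced only for this example; whether partial hours are rounded up is an assumption, not something stated here, and actual rates may differ from the starting price.

```python
import math

# Starting on-demand H100 price quoted above (USD per GPU-hour).
# Actual pricing may vary by instance type; treat this as a placeholder.
H100_HOURLY_USD = 2.49


def estimate_cost(hours, gpus=1, hourly_rate=H100_HOURLY_USD, round_up_hours=False):
    """Estimate the cost in USD of running `gpus` GPUs for `hours` hours.

    round_up_hours is a hypothetical switch: set it to True to model
    billing that charges each partial hour as a full hour.
    """
    if hours < 0 or gpus < 1:
        raise ValueError("hours must be >= 0 and gpus must be >= 1")
    billable_hours = math.ceil(hours) if round_up_hours else hours
    return round(billable_hours * gpus * hourly_rate, 2)


if __name__ == "__main__":
    # One GPU for a 10-hour fine-tuning run.
    print(estimate_cost(10))
    # Eight GPUs for 1.5 hours, assuming partial hours are rounded up.
    print(estimate_cost(1.5, gpus=8, round_up_hours=True))
```

Passing a different `hourly_rate` lets the same estimate be reused for other GPU types.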

Private Cloud

Reserve thousands of NVIDIA H100s, H200s, GH200s, B200s, and GB200s with NVIDIA Quantum-2 InfiniBand networking.

The lowest-cost AI inference

Access the latest LLMs through a serverless API endpoint with no rate limits.

Get the most coveted and highest performing NVIDIA GPUs

NVIDIA H100

Lambda is one of the first cloud providers to make NVIDIA H100 Tensor Core GPUs available on-demand in a public cloud.

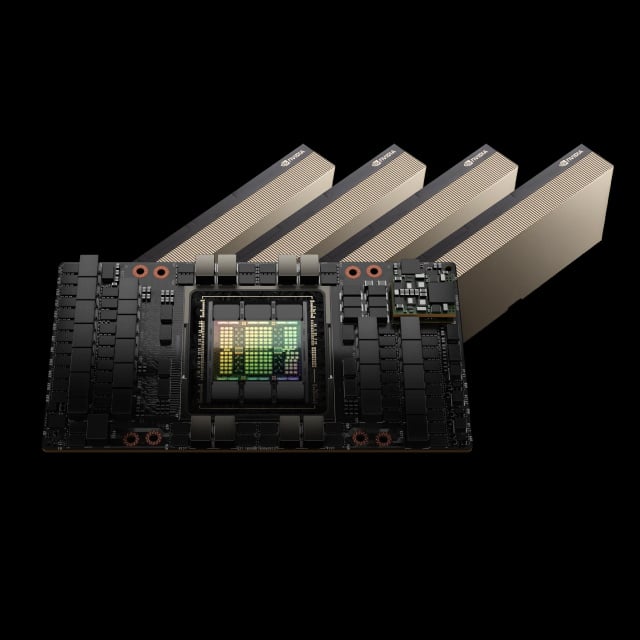

NVIDIA H200

Lambda Private Cloud is now available with the NVIDIA H200 Tensor Core GPU. H200 is packed with 141GB of HBM3e running at 4.8TB/s.

NVIDIA B200


The NVIDIA B200 Tensor Core GPU is based on the latest Blackwell architecture with 180GB of HBM3e memory at 8TB/s.

Lambda Stack is used by more than 50k ML teams

One-line installation and managed upgrade path for PyTorch®, TensorFlow, NVIDIA® CUDA®, NVIDIA cuDNN®, and NVIDIA drivers.

Ready to get started?

Create a cloud account instantly to spin up GPUs today, or contact us to secure a long-term contract for thousands of GPUs.