Benchmarks comparing inference performance of the NVIDIA GH200 Grace Hopper Superchip, enhanced by ZeRO-Inference, to NVIDIA H100 and A100 Tensor Core GPUs.

The Lambda Deep Learning Blog

Recent Posts

Persistent storage is now available in all Lambda Cloud regions and for all on-demand instance types, including our NVIDIA H100 Tensor Core GPU instances.

Published 12/19/2023 by Kathy Bui

Benchmarks of NVIDIA’s Transformer Engine, which boosts FP8 performance by an impressive 60% in GPT-3-style model tests on NVIDIA H100 Tensor Core GPUs.

Published 11/21/2023 by Chuan Li

GPU benchmarks on Lambda’s offering of the NVIDIA H100 SXM5 vs the NVIDIA A100 SXM4 using DeepChat’s 3-step training example.

Published 10/12/2023 by Chuan Li

Lambda has launched a new Hyperplane server combining the fastest GPU on the market, NVIDIA H100, with the world’s best data center CPU, AMD EPYC 9004.

Published 09/07/2023 by Maxx Garrison

How to use FlashAttention-2 on Lambda Cloud, including H100 vs A100 benchmark results for training GPT-3-style models with it.

Published 08/24/2023 by Chuan Li

On-demand HGX H100 systems with 8x NVIDIA H100 SXM instances are now available on Lambda Cloud for only $2.59/hr/GPU.

Published 08/02/2023 by Kathy Bui

How to build the GPU infrastructure needed to pretrain LLM and Generative AI models from scratch (e.g. GPT-4, LaMDA, LLaMA, BLOOM).

Published 07/13/2023 by David Hall

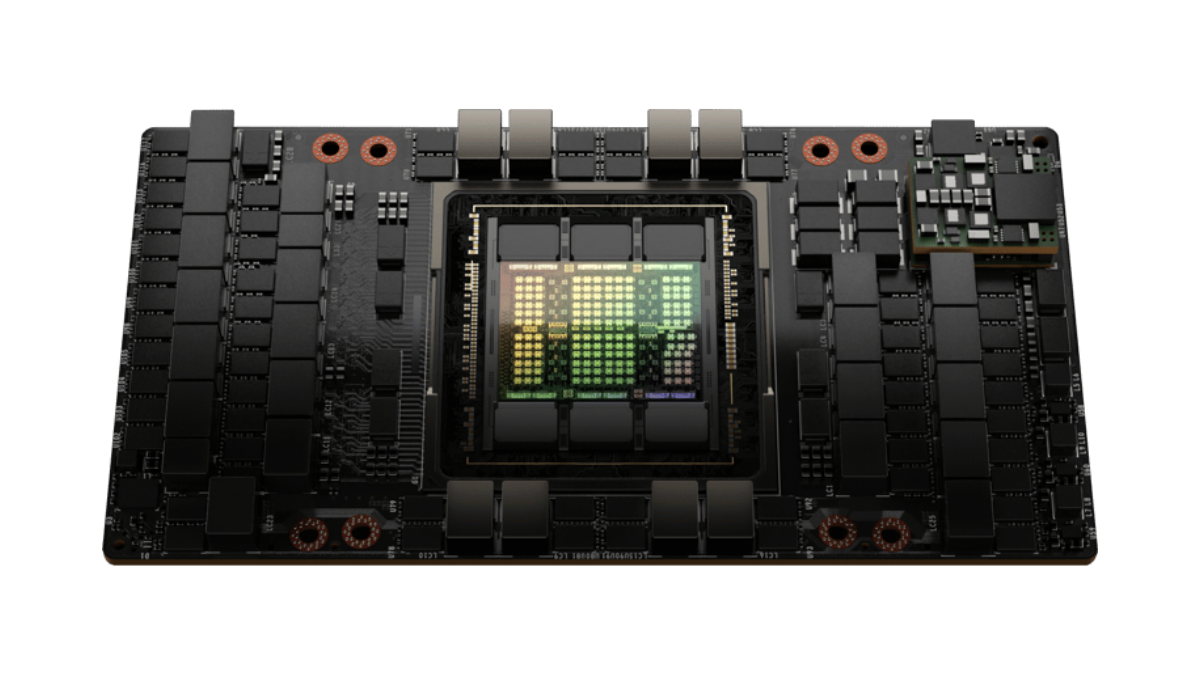

Lambda Cloud has deployed a fleet of NVIDIA H100 Tensor Core GPUs, making it one of the first to market with general-availability, on-demand H100 GPUs. The high-performance GPUs enable faster training times, better model accuracy, and increased productivity.

Published 05/10/2023 by Kathy Bui

In early April, NVIDIA H100 Tensor Core GPUs, the fastest GPU type on the market, will be added to Lambda Cloud. NVIDIA H100 80GB PCIe Gen5 instances will go live first, with SXM to follow very shortly after.

Published 03/21/2023 by Mitesh Agrawal

Native support for FP8 data types is here with the release of the NVIDIA H100 Tensor Core GPU. These new FP8 types can speed up training and inference.

Published 12/07/2022 by Jeremy Hummel

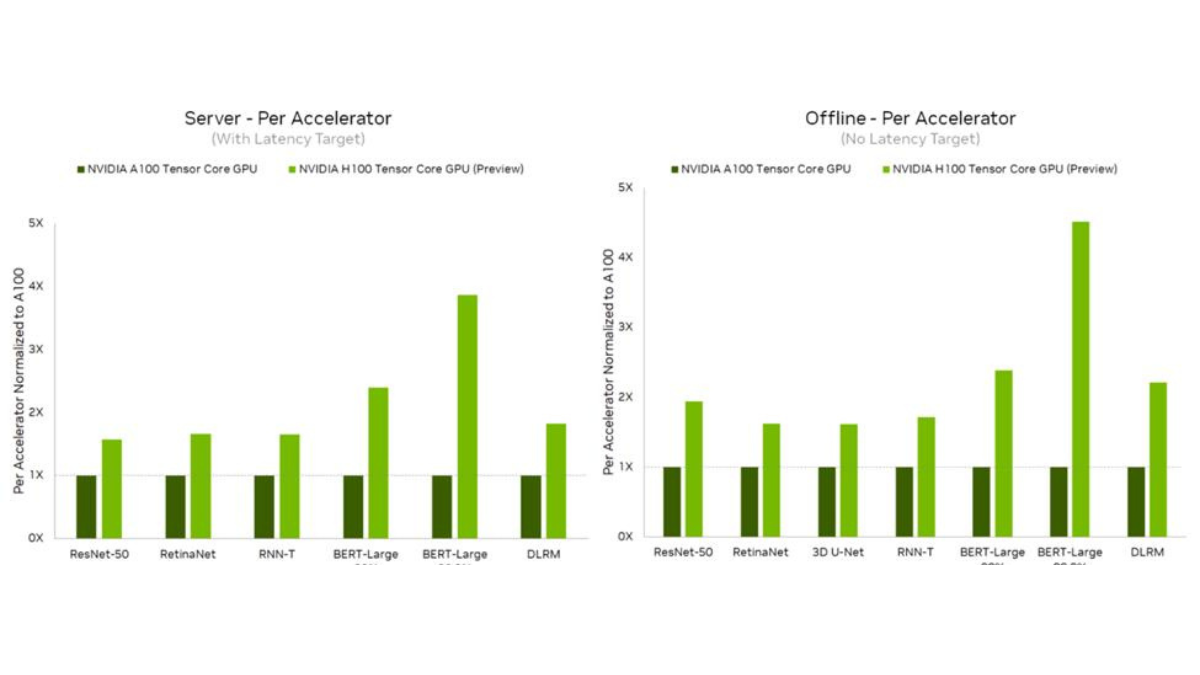

This article discusses the performance and scalability of H100 GPUs and the reasons to upgrade your ML infrastructure with the H100 release from NVIDIA.

Published 10/05/2022 by Chuan Li