Lambda's Deep Learning Curriculum

This curriculum provides an overview of free online resources for learning about deep learning. It includes courses, books, and even important people to follow.

If you only want to do one thing, do this:

Train an MNIST network with PyTorch.

https://github.com/pytorch/examples/tree/master/mnist

Introductory

CS231n: Convolutional Neural Networks for Visual Recognition

CS231n Class Notes:

https://cs231n.github.io/

Introduction to Gradient Based Learning

https://www.iro.umontreal.ca/~pift6266/H10/notes/gradient.html
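
To get a feel for what gradient-based learning means before reading the notes, here is a minimal sketch in plain Python. It is not taken from the linked notes. It assumes a toy problem (fitting a line to five points with batch gradient descent on mean squared error), and the function name `fit_line`, the learning rate, and the step count are illustrative choices.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y = w*x + b by batch gradient descent on mean squared error.

    lr and steps are illustrative values chosen for this toy data set.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every point under the current parameters.
        errs = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(err^2) with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errs, xs))
        grad_b = (2.0 / n) * sum(errs)
        # Step against the gradient to reduce the loss.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (an assumption for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(f"w={w:.4f}, b={b:.4f}")
```

Training a neural network follows the same loop, with the hand-written gradients replaced by automatic differentiation and the two parameters replaced by many.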

Fast.ai’s Online Courses:

Full Stack Deep Learning (especially their infrastructure section):

https://fullstackdeeplearning.com/

Deeper Dives:

Deep Learning Book - the definitive deep learning textbook (Goodfellow, Bengio, and Courville):

https://www.deeplearningbook.org

Recurrent Neural Networks - "Generating Sequences With Recurrent Neural Networks", a very easy-to-understand research paper by Alex Graves:

http://arxiv.org/pdf/1308.0850v5.pdf

Convolutional Neural Networks:

http://deeplearning.net/tutorial/lenet.html

Deep Learning for NLP:

CS224d: Deep Learning for Natural Language Processing: http://cs224d.stanford.edu

Word2Vec: https://www.tensorflow.org/tutorials/text/word2vec

People:

Often, the easiest way to build a mental model of a field is to read the work of the important contributors in chronological order. This is a helpful list of important players in the field of deep learning.

Geoff Hinton (University of Toronto)

http://www.cs.toronto.edu/~hinton/

Andrew Ng (Stanford, Coursera, Baidu, Landing AI)

Worked with Jeff Dean and Hinton on the Google Brain team

http://cs.stanford.edu/people/ang/

Yann LeCun (Courant Institute NYU, now Meta) - Specializes in Convnets

http://yann.lecun.com/

Yoshua Bengio (Université de Montréal) - I love his work, and his papers are very easy to read.

Keywords: manifold learning and representation learning.

http://www.iro.umontreal.ca/~bengioy/yoshua_en/index.html

Ian Goodfellow - invented GANs (yea)

https://scholar.google.ca/citations?user=iYN86KEAAAAJ&hl=en

Andrej Karpathy (formerly Tesla’s AI head) - great work with CNNs

https://karpathy.ai

Alex Graves - great work with LSTMs, Neural Turing Machines, and the Differentiable Neural Computer

https://scholar.google.co.uk/citations?user=DaFHynwAAAAJ&hl=en

Oriol Vinyals - co-author of seq2seq learning and knowledge distillation

https://scholar.google.com/citations?user=NkzyCvUAAAAJ&hl=en

Early Deep Learning Papers I learned from:

To Recognize Shapes, First Learn to Generate Images

http://www.cs.toronto.edu/~fritz/absps/montrealTR.pdf

Practical recommendations for gradient-based training of deep architectures

http://arxiv.org/abs/1206.5533

Bengio's Representation Learning

http://arxiv.org/abs/1206.5538

Ng's Large Scale Learning Paper

http://ai.stanford.edu/~ang/papers/icml12-HighLevelFeaturesUsingUnsupervisedLearning.pdf

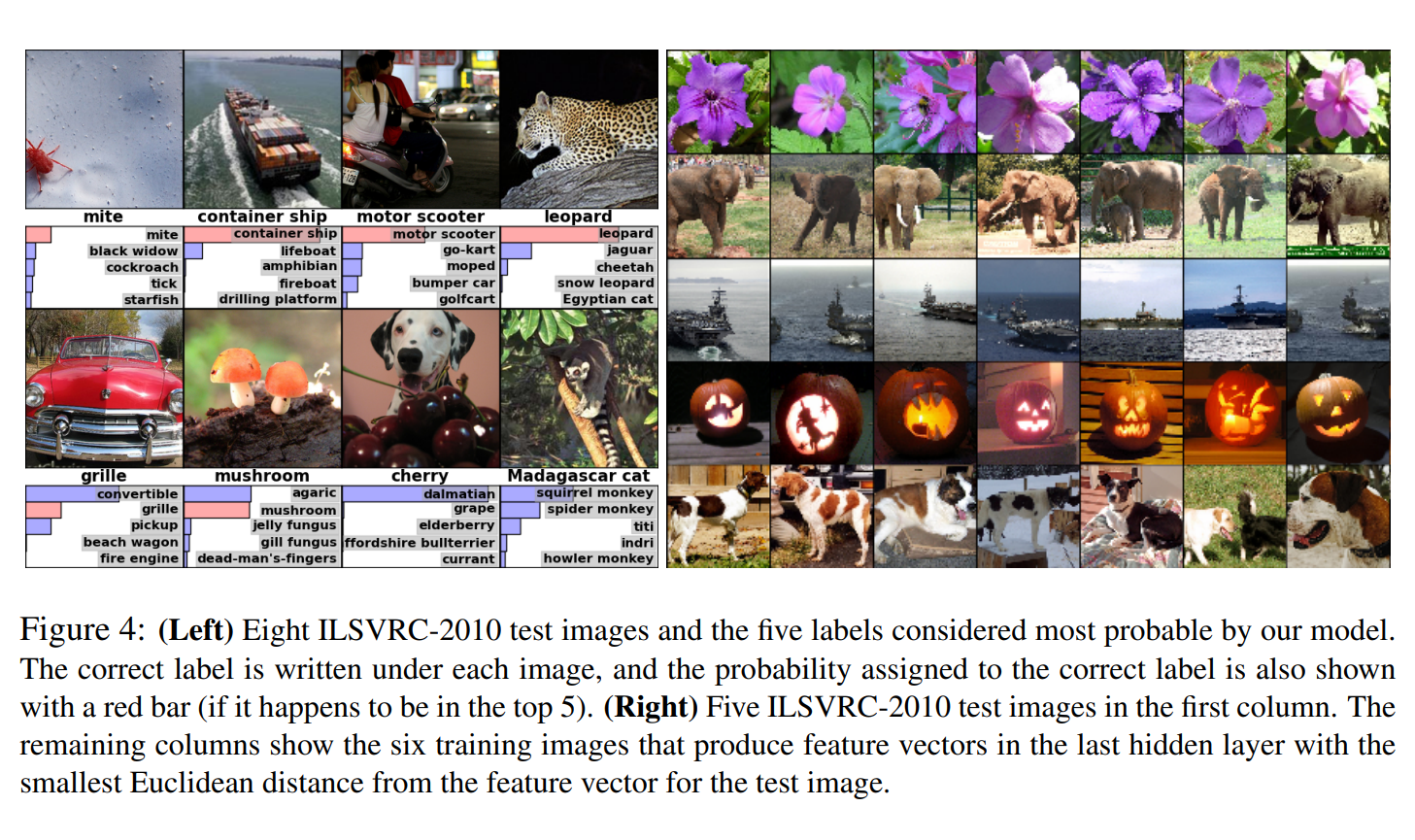

Thumbnail is Figure 4 from the original AlexNet paper: ImageNet Classification with Deep Convolutional Neural Networks.